SDC#8 - Database Replication Under the Hood

Discord's Trillion Message Problem and More...

Hello, this is Saurabh…👋

Welcome to the 65 new subscribers who have joined us since last week.

If you aren’t subscribed yet, join 800+ curious developers looking to expand their knowledge by subscribing to this newsletter.

In this edition, I cover the following topics:

🖥 System Design Concept → Database Replication Under the Hood

🧰 Case Study → How Discord Dealt with Trillions of Messages?

🍔 Food For Thought → 8 Tips to Approach a System Design Interview

So, let’s dive in.

🖥 Database Replication Under the Hood

Database replication isn’t magic.

I did touch upon this topic in an earlier post about making your databases highly available with replication.

However, behind the scenes, there are different replication methods and techniques at play.

Let’s look at a few important ones:

1 - Statement-based Replication

Statement-based replication is one of the oldest techniques of replication.

It was used by databases like MySQL. But over the years, it has largely fallen out of favor.

What was so bad about it?

Let’s say you are going for leader-based replication and there are one or more follower nodes under a single leader node.

With Statement-based Replication, two things happen:

The leader will log every write request or statement that it executes.

It will also send that statement log to its followers.

This means that every INSERT, UPDATE & DELETE statement is forwarded to followers.

Followers parse and execute the SQL statement as if they had received it directly from a client.

Here’s what it looks like:

This mechanism had a few advantages:

Highly efficient in terms of network bandwidth because only SQL statements are sent to the followers rather than the complete data

Higher portability across different database versions.

Simpler and easier to use.

But as I said earlier, it fell out of favor because of some big limitations:

Any statement that calls a non-deterministic function (such as NOW() & UUID()) to get the current date and time will generate a different value on each replica.

If statements use an auto-incrementing column, they must be executed in the same order on each replica. In other words, you can’t have multiple transactions executing concurrently.

Statements with side effects such as triggers or stored procedures can create unforeseen effects on the replica.

2 - Shipping the Write-Ahead Log (WAL)

The write-ahead log is an append-only sequence of bytes that contains all writes to the database.

Databases create a write-ahead log for their own purposes to safeguard against potential crashes. However, this log can also be shipped to the followers.

By shipping the write-ahead log or WAL to another node, it’s possible to build an exact replica of the data.

The leader node ships its own log to the followers and the followers process this log and build a copy of the exact same data structures as found on the leader.

This method can be used in PostgreSQL and other databases but it has one glaring disadvantage.

The write-ahead log describes the data at a very low level. Basically, it contains details of which bytes were changed in which particular disk blocks. In other words, it depends on the exact storage engine to make sense.

However, if a database changes its storage format from one version to another, you can’t use the same log as it might lead to unpredictable results.

The operational impact of this is that you cannot implement a zero-downtime upgrade.

3 - Row-Based Replication

The third approach i.e. row-based replication gets around the issue with write-ahead logs by using different log formats for replication and storage engine.

It does so by creating a logical log - a sequence of records showing all the writes to the database tables in row format.

Here’s how it works:

For an insert operation, the log contains the new values of all the columns.

For a delete operation, the log contains information to uniquely identify the deleted row such as the primary key.

For an updated row, the log contains information to uniquely identify the updated row and the new values of all the modified columns.

In case multiple rows are impacted by an update operation, several log records are generated.

See the below illustration that shows the row-based replication approach.

Since the row-based replication is not tightly coupled with the storage engine, it can support backward compatibility across database versions.

In other words, leaders and followers can potentially run different versions of the database.

🧰 How Discord Dealt with Trillions of Messages?

Discord is one of the most popular places to talk and hang out on the Internet.

In 2017, Discord was storing billions of messages from users all across the globe. At that point, they migrated from MongoDB to Cassandra in search of a scalable, fault-tolerant and low-maintenance database.

Over the next few years, Discord’s database cluster grew from 12 Cassandra nodes to 177 Cassandra nodes storing trillions of messages.

They had chosen Cassandra to be the database that would support Discord’s continuous growth. However, it didn’t turn out to be the case.

The Cassandra cluster ran into serious performance issues requiring lots of effort to run and maintain resulting in frequent on-call issues, unpredictable latency and expensive maintenance operations.

The Reasons for Discord’s Database Problems

So, what was causing these problems?

There were a few reasons as follows:

Reason#1 - Partitioning Strategy

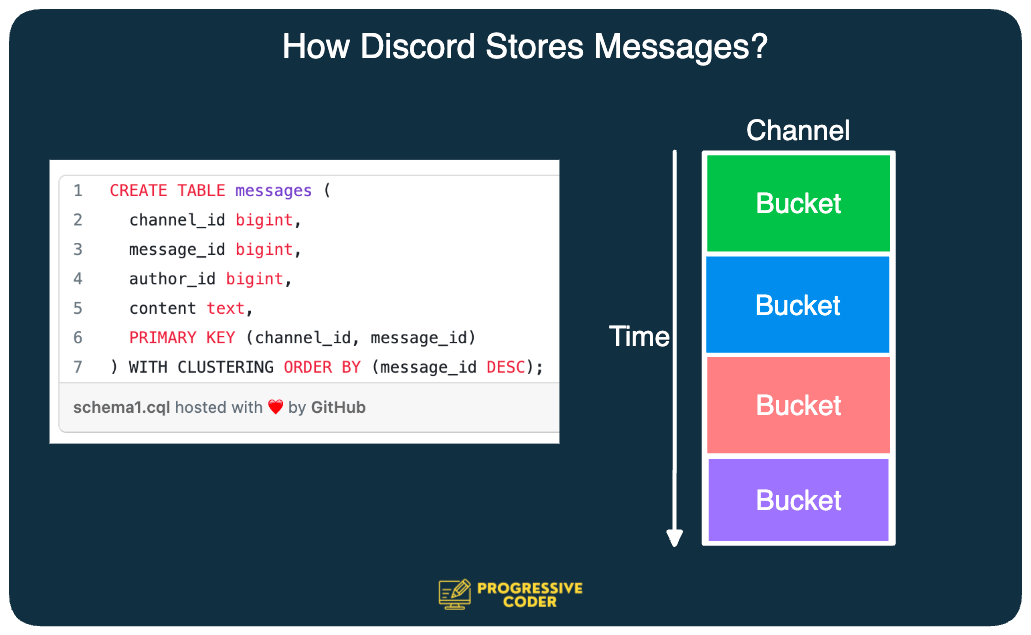

In Discord, messages belong to a channel and are stored in a table known as messages.

This table was partitioned by the channel_id along with a bucket. The bucket is basically a static time window.

Here’s a pictorial representation of the same.

Such a strategy meant that all messages for a given channel and bucket (time period) would be stored on the same partition.

The implication of this was that a Discord server with hundreds of thousands of people could potentially generate enough messages in a short period of time to overwhelm a particular partition. This was a big performance pitfall.

See the below illustration:

Reason#2 - Cassandra Reads More Expensive Than Writes

By design, reads in Cassandra are more expensive than writes.

This is because writes are appended to a commit log and written to an in-memory structure called a memtable. Periodically, the memtable is flushed to the disk in the form of SSTable.

When you are writing new records, this doesn’t cause issues.

However, read operations need to potentially query the memtable and multiple SSTables. This is an expensive operation and lots of concurrent reads in high-volume Discord channels can eventually hotspot a partition.

Check the below illustration depicting a “hot partition”.

These hot partitions created cascading latency issues across the entire database cluster.

A surge in traffic for one channel and bucket pair led to increased latency in a node as it tried harder to keep up and serve the incoming traffic. Other queries to this node also got affected resulting in broader end-user impact.

Reason#3 - Trouble with Cluster Maintenance

Over a period of time, Cassandra compacts SSTables on disk to improve the performance of read operations. However, during the compaction period, the ongoing read operations suffered even more.

To mitigate this situation, the team was forced to take the node undergoing compaction out of rotation so that it didn’t get traffic and bring it back into the cluster to resume activities.

The Solution

Ultimately, the team at Discord decided to undertake a massive project to transform the architecture.

There were two major aspects to the overall solution:

1 - Migration to ScyllaDB

As part of this project, a decision was made to replace Cassandra with ScyllaDB.

Why ScyllaDB?

It was Cassandra compatible

Written in C++ rather than Java

It provided better workload isolation via its shard-per-core architecture

No garbage collection

Special support by the ScyllaDB team

Based on the documentation released, the main reason for choosing ScyllaDB appeared to be the lack of a garbage collector.

The Discord team had faced many issues with Cassandra’s garbage collector.

GC pauses affected the latency in a big way. In fact, some consecutive GC pauses forced an operator to manually reboot and babysit the node back to health.

2 - Building Dedicated Data Services

During their experiences with Cassandra, the Discord team struggled a lot with hot partitions.

High traffic to a given partition resulted in uncontrolled concurrency resulting in cascading latency. Therefore, it became necessary to control the amount of concurrent traffic to hot partitions in order to protect the database from being overwhelmed.

To handle this, the team wrote special data services using Rust.

These were basically intermediary services that sat between the API monolith and the database cluster. Rust was chosen because of team familiarity and the promise of C/C++ speeds without sacrificing safety.

Each of the data services contained roughly one gRPC endpoint per database query and no business logic.

But the real magic of these data services was request coalescing.

What is request coalescing?

If multiple users are requesting the same row at the same time, request coalescing helps query the database only once.

Here’s what happens:

The first user that makes a request causes a worker task to spin up in the service.

Subsequent requests will check for the existence of that task and subscribe to it.

Once the worker task queries the database and gets the result, it will return the row to all subscribers.

See the below illustration:

To make things even better, the Discord team also implemented consistent hash-based routing for the Data Services to enable more effective coalescing.

They used a routing key (channel ID) for each request to the data service. In other words, all requests for a particular channel go to the same instance of the data service reducing the load on the database even further.

Testing the Migration

The goal of the migration to the new ScyllaDB cluster was simple - no downtime.

To make things faster, the Discord team extended the data service library to perform large-scale data migrations. The migrator was written in Rust and once it was turned on, the migration of messages took place at a speed of 3.2 million per second.

It took a few days but ultimately, everything was migrated over to ScyllaDB and full production traffic was shifted over to the new cluster.

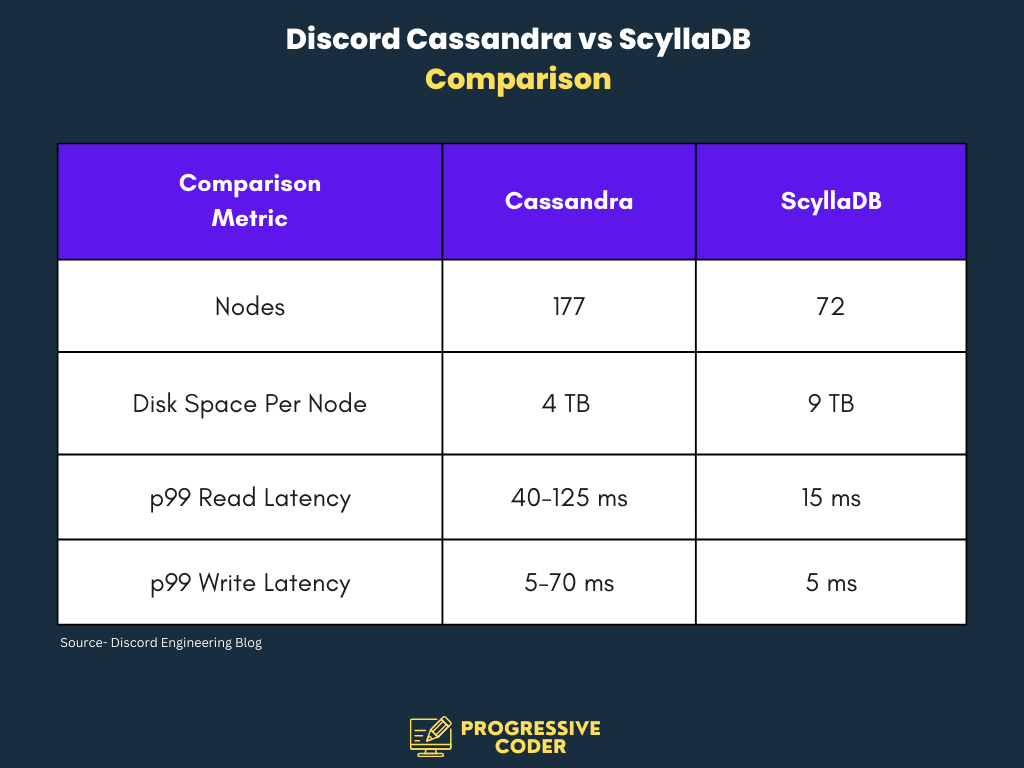

Here’s a chart that shows some stats comparing the old Cassandra cluster with the new ScyllaDB cluster.

However, the real test of the entire migration came during the finals of the FIFA World Cup in 2022.

People from all over the world tuned in to watch the World Cup and a huge number of messages were recorded on the Discord servers as people discussed the events of the match in real time

As per the testing by the Discord engineering team, they found the entire platform holding up incredibly well. The database didn’t break a sweat even during some of the spikes during the roller-coaster match.

Learnings

So what are some of the learnings we can take from Discord’s case study?

Here are a few:

The partitioning strategy plays a crucial role in the overall database performance. In the case of Discord, a combination of channel ID and time bucket along with usage pattern led to the problem of “hot partitions”.

Reads and writes can have totally different performance levels depending on the database.

Cluster maintenance is a huge drag on resources and system’s performance.

Latency is a holistic topic. Just swapping databases may not be enough. In the case of Discord, they also built new data services to reduce the burden on databases.

P.S. This post is inspired by the explanation provided on the Discord Engineering Blog. You can find the original article over here.

🍔 Food For Thought

👉 How to approach a System Design Interview?

System Design interviews have become more and more important over the last few years.

In fact, as AI tools become more popular and lower-level programming becomes a lot easier, a good knowledge of System Design has the potential to differentiate you from the rest.

Here is a post on X (Twitter) where I summarized the 8 tips to approach a System Design interview the right way. As of writing this, the post has received 140+ likes.

Link to the post below:

https://x.com/ProgressiveCod2/status/1701195912062599548?s=20

👉 How to be more productive as a developer?

“Do you ever have one of those days when you end up exhausted but can't list the things you finished?”

We’ve all been there at some point and most of the time, we don’t know what to do.

But Raul asked that question and also came up with a solution from his own experience that can help a lot of developers out there.

Check out this amazing post by Raul 👇

Link to the post below:

https://x.com/RaulJuncoV/status/1704483708667879760?s=20

That’s it for today! ☀️

Enjoyed this issue of the newsletter?

Share with your friends and colleagues

See you later with another value-packed edition — Saurabh.

Duplicate Requests can be served from Cache as well. Why don't they use cache? Is it because Scylla DB is fast?