Agoda uses Kafka to transfer hundreds of terabytes of data across various supply systems every day. It’s an indispensable part of their tech stack.

As you might know, Agoda’s goal is to aggregate and provide the best prices from various external suppliers (hotels, restaurants, cab providers, etc). To ensure that customers always get the most up-to-date and accurate pricing information, Agoda’s supply system needs to process a massive amount of price updates from the suppliers in real time.

For example, a single supplier can provide 1.5 million price updates and offer details in just one minute. Any delays or failures in reflecting these updates can lead to incorrect pricing and booking failures.

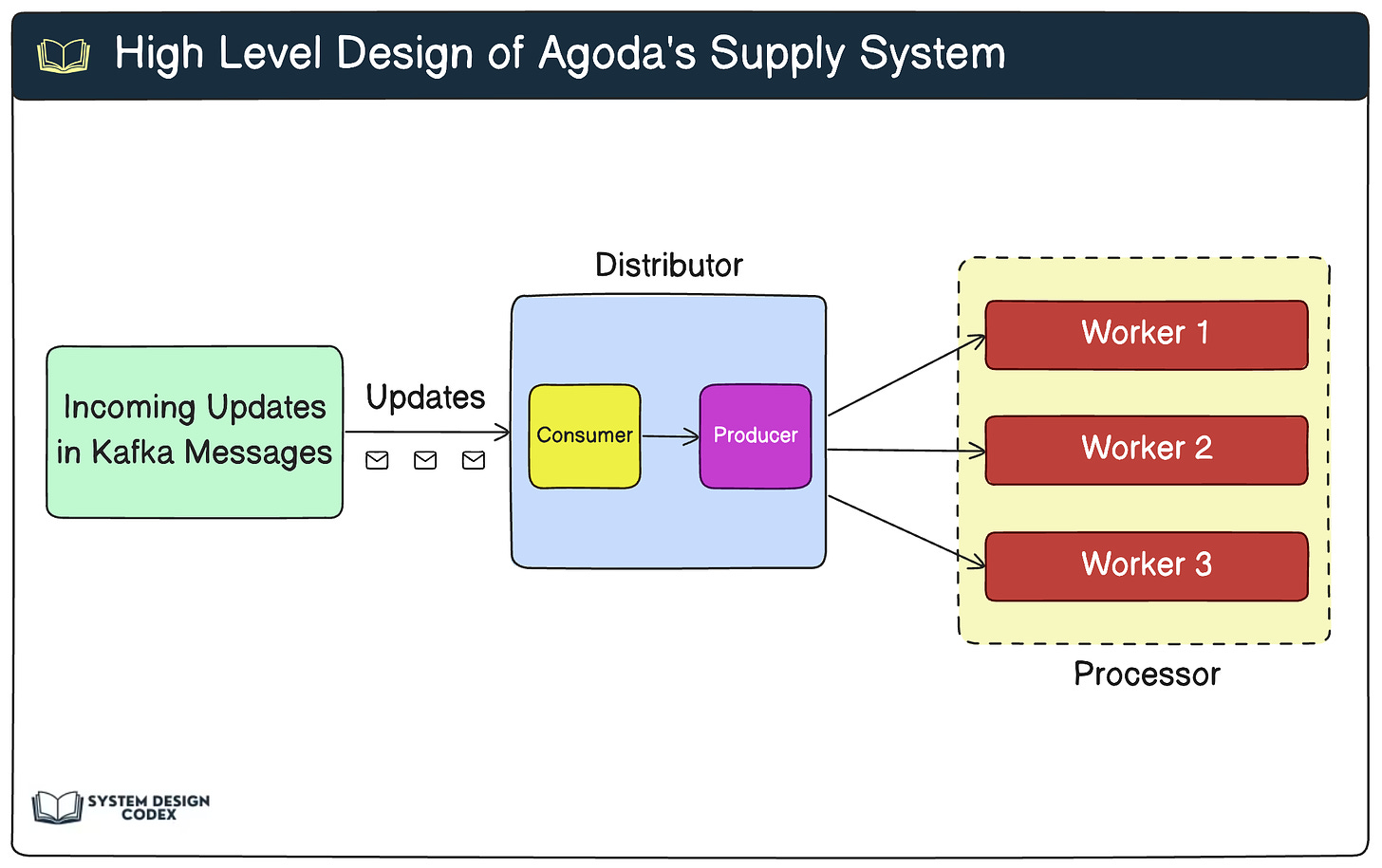

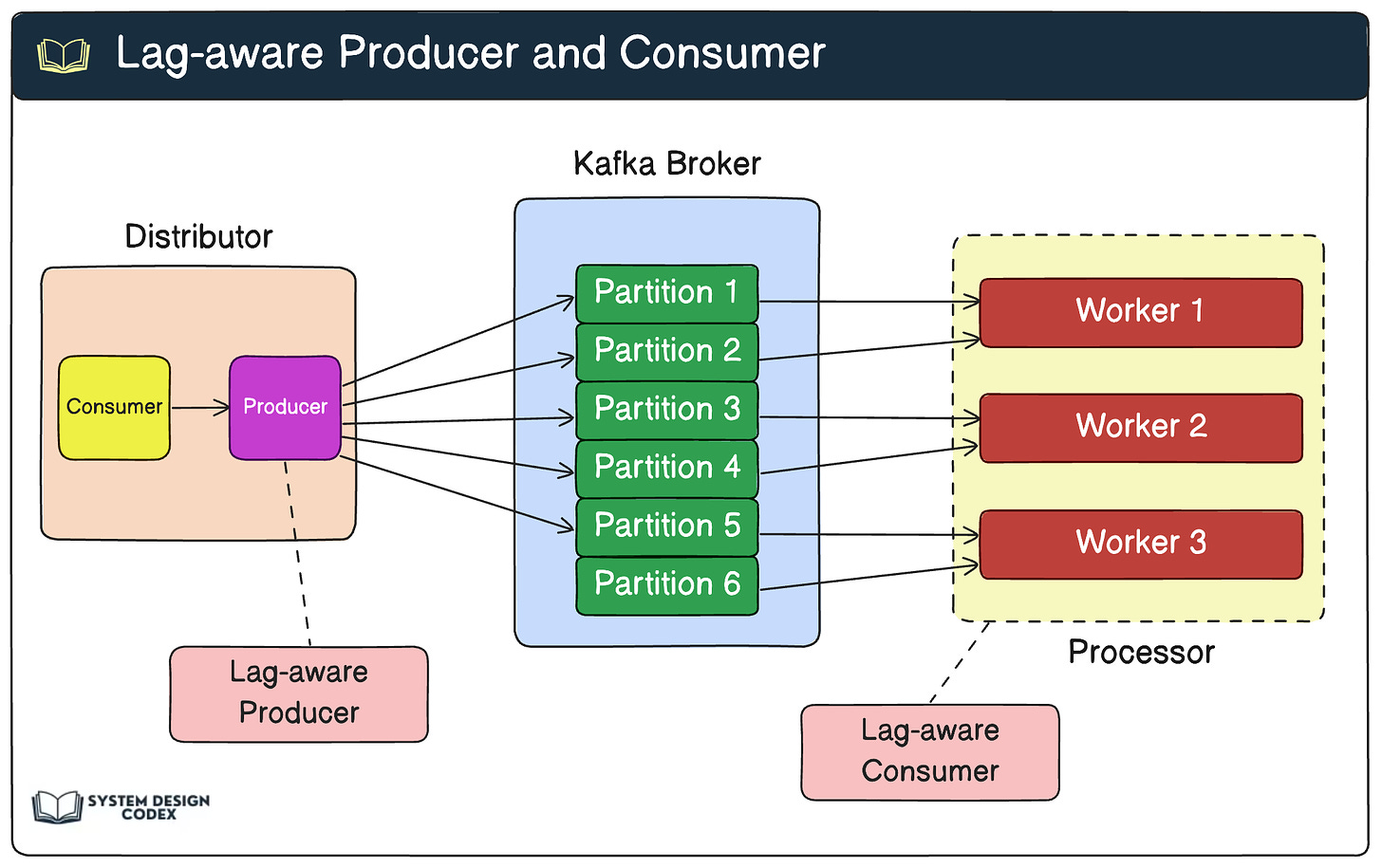

Here’s what the typical Kafka application setup within Agoda’s supply system looks like:

The key components are as follows:

Distributor: The component consumes price updates from various 3rd party systems (suppliers) and distributes processing jobs to the downstream processor service across multiple data centers.

Processor: The processor service is responsible for transforming, processing, and delivering the updates to the downstream services and databases.

Kafka: It acts as the messaging backbone between the distributor and processor components.

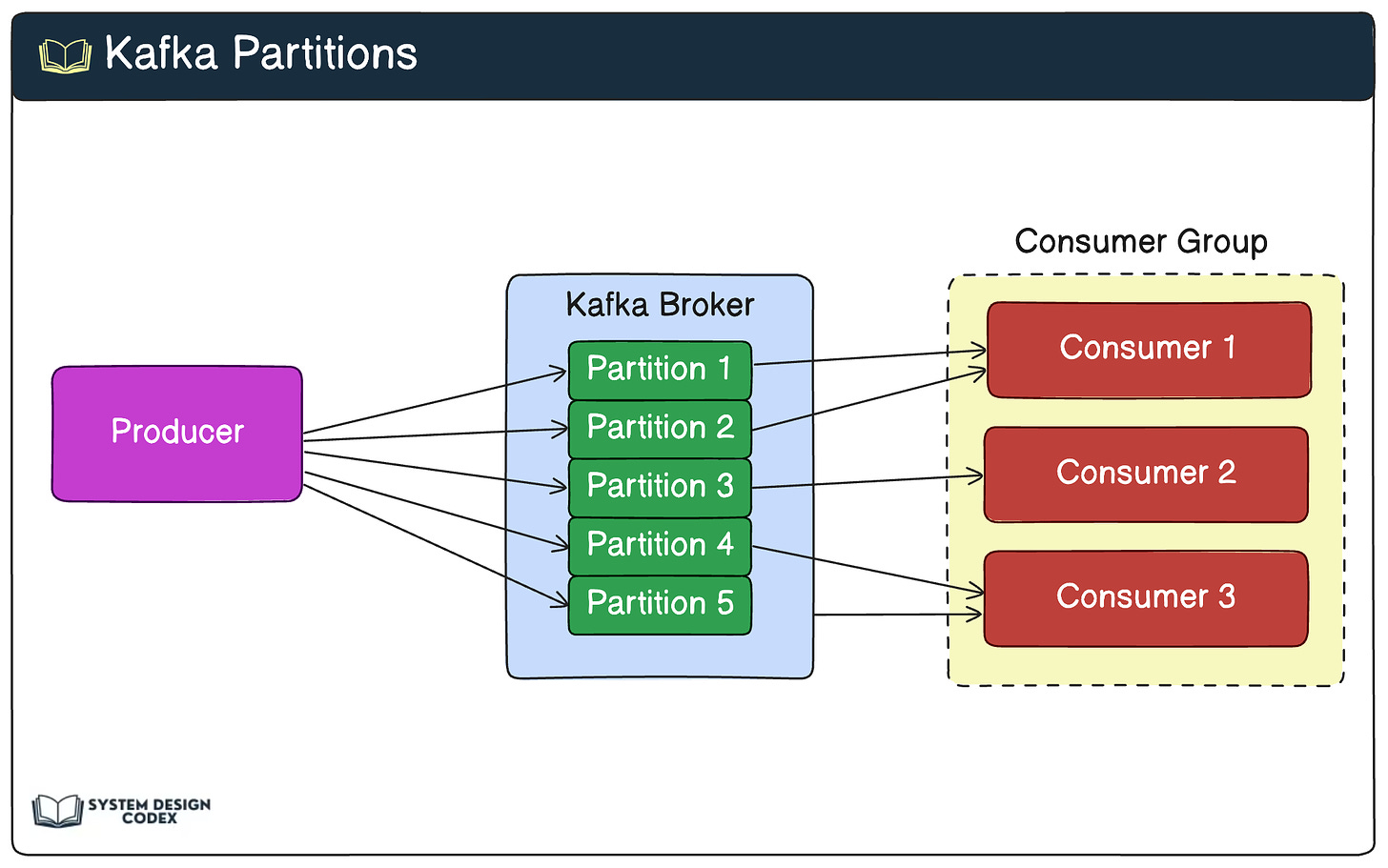

One of the key features of Kafka that makes it suitable for such large-scale data processing is the use of partitions.

Partitions help Kafka achieve parallelism: the messages of a topic are spread across multiple partitions, and each partition can be read by a different consumer. In other words, it can process messages faster and more efficiently.

However, Agoda’s online travel booking platform had some challenges that were made more apparent by Kafka’s parallelism.

Partitioner and Assignor Strategy

The partitioner and assignor strategies in Kafka play a crucial role in determining how messages (price updates from suppliers) are distributed across partitions and consumers.

Partitioner: Determines how messages are distributed across partitions when they are produced. Examples include the round-robin and sticky partitioning strategies.

Assignor: Determines how partitions are assigned to consumers within a consumer group. Examples include the range assignor and the round-robin assignor.
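To make the two roles concrete, here is a minimal sketch in plain Python rather than Kafka's own client code. The function names `round_robin_partitioner` and `round_robin_assignor` and the worker names are made up for illustration. It only shows the idea: messages are dealt out to partitions in turn, and partitions are dealt out to consumers in turn.

```python
from collections import defaultdict
from itertools import cycle


def round_robin_partitioner(messages, num_partitions):
    """Send message i to partition i mod num_partitions (illustrative only)."""
    partitions = defaultdict(list)
    for i, message in enumerate(messages):
        partitions[i % num_partitions].append(message)
    return dict(partitions)


def round_robin_assignor(partition_ids, consumers):
    """Hand partitions to consumers one at a time, in turn (illustrative only)."""
    assignment = {consumer: [] for consumer in consumers}
    for partition, consumer in zip(sorted(partition_ids), cycle(consumers)):
        assignment[consumer].append(partition)
    return assignment


# Hypothetical data: 12 price updates, 6 partitions, 3 consumers.
messages = [f"price-update-{i}" for i in range(12)]
partitions = round_robin_partitioner(messages, num_partitions=6)
assignment = round_robin_assignor(
    partitions.keys(), ["worker-1", "worker-2", "worker-3"]
)
```

Every partition ends up with the same number of messages and every worker with the same number of partitions, no matter how fast each worker is.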

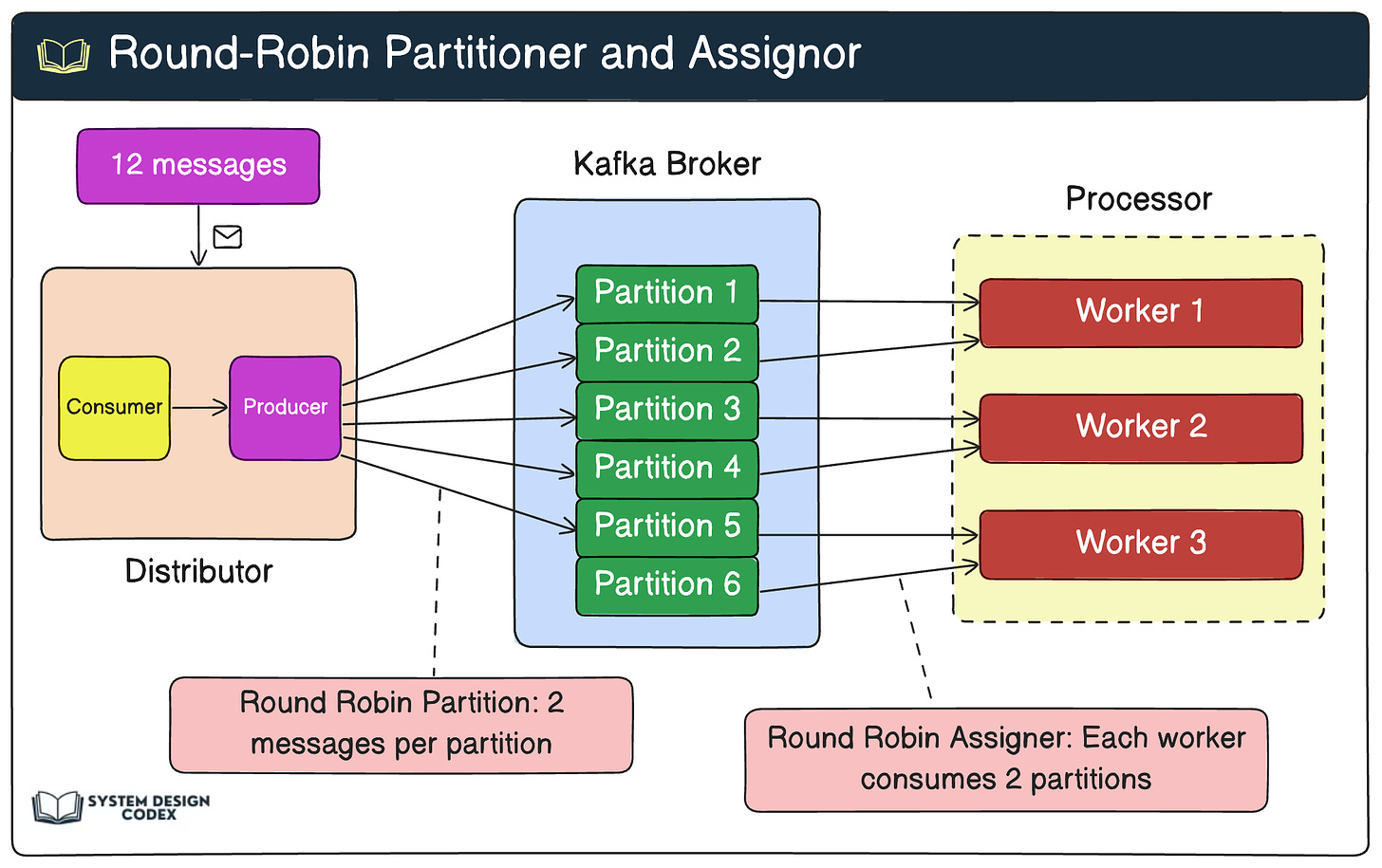

See the diagram below for a round-robin partitioner and round-robin assignor strategy:

Traditionally, these strategies were designed to work under the assumptions of homogeneous consumer processing capabilities and uniform message workloads.

However, in Agoda’s case, these assumptions didn’t hold true, which led to load-balancing challenges with Kafka.

Challenges

The two main challenges are as follows:

Heterogeneous Hardware: Agoda uses a private cloud with Kubernetes, which can lead to pods running on servers of different hardware generations. Benchmarks show significant performance differences between hardware generations.

Uneven Workload: Messages may require different processing steps, leading to varying processing times. For example, processing certain types of messages may require calling 3rd party APIs and executing database queries, resulting in latency fluctuations.

These challenges led to the over-provisioning problem.

The Over-provisioning Problem At Agoda

Agoda initially used the Kafka round-robin partitioner and assignor to distribute the same number of messages to each partition and pod.

However, this led to over-provisioning.

Over-provisioning involves allocating more resources than necessary to handle the expected peak workload efficiently.

In Agoda’s case, the reason was an inefficient distribution of the load across their Kafka consumers.

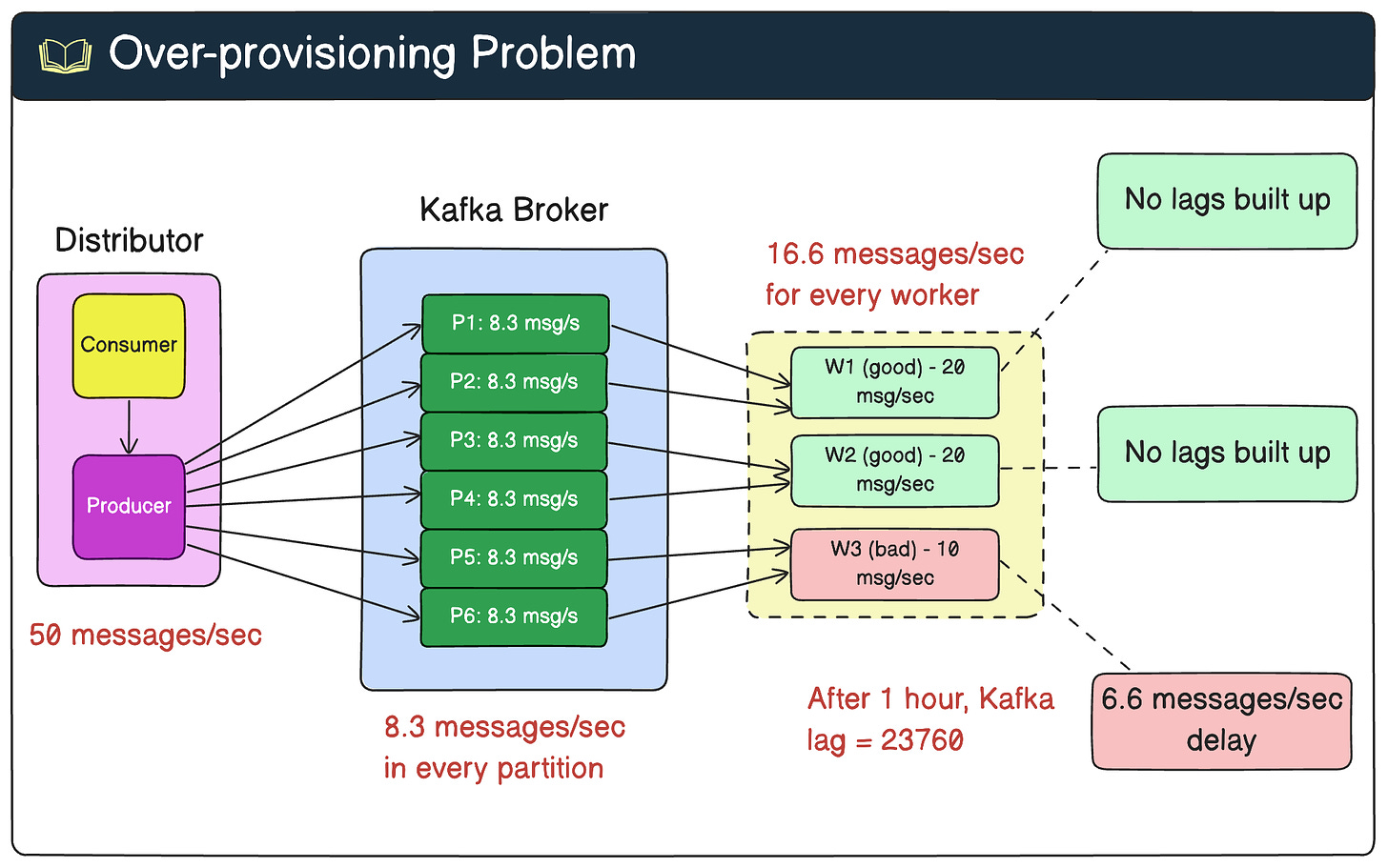

For example, suppose Agoda deploys Kafka consumers on heterogeneous hardware in their processor service, with:

2 faster workers with a processing rate of 20 messages/second each.

1 slower worker with a processing rate of 10 messages/second.

In this setup, the total expected capacity would be 20 + 20 + 10 = 50 messages/second.

However, with a round-robin distribution, each worker would receive 1/3 of the overall messages regardless of processing speed. If the incoming traffic consistently hits 50 messages/second, here’s what would happen:

The 2 faster workers can easily handle their share of ~16.7 messages per second each.

The slower worker cannot keep up with its allocated ~16.7 messages per second, causing the lag to build up over time.

The diagram below shows this scenario:

To avoid high latency, Agoda would have to add extra resources to this setup to maintain processing SLAs. Under round-robin, every worker receives the same share, so that share must not exceed the slowest worker’s 10 messages/second. To process 50 messages/second, they would therefore need to scale out to 50 / 10 = 5 machines. In other words, they end up over-provisioning by 2 extra machines due to the suboptimal distribution logic that doesn’t account for heterogeneous hardware.
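The arithmetic in this example can be checked with a few lines of Python. This is a sketch only: the helper names are made up, and it assumes any machine added to the pool is at least as fast as the slowest existing worker.

```python
import math


def round_robin_lag_growth(rates, incoming_rate):
    """Lag growth per worker (messages/second) when each gets an equal share."""
    share = incoming_rate / len(rates)
    return [max(0.0, share - rate) for rate in rates]


def machines_needed_round_robin(slowest_rate, incoming_rate):
    """Smallest pool size at which an equal share fits the slowest worker."""
    return math.ceil(incoming_rate / slowest_rate)


rates = [20, 20, 10]  # messages/second, from the example above
growth = round_robin_lag_growth(rates, incoming_rate=50)
needed = machines_needed_round_robin(slowest_rate=min(rates), incoming_rate=50)
extra = needed - len(rates)
```

Here `growth` shows lag building only on the slow worker (about 6.7 messages every second), `needed` comes out as 5 machines, and `extra` as 2, even though the original three workers have a combined capacity of 50 messages/second.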

Also, the same scenario can occur when the workload for each message is not identical. Either way, it results in:

Increased hardware costs

Wasted resources as some consumers sit idle while others are overburdened

More maintenance overhead

The Solution Agoda Didn’t Adopt

The first obvious solution to overcome the over-provisioning challenge was static balancing. There were mainly two approaches in this regard:

Deployment on identical pods

Weighted load balancing

Deployment on Identical Pods

This solution involves controlling the types of hardware used in service deployments. However, it had some drawbacks:

Maintaining a homogeneous hardware environment can be expensive, especially in a private cloud setup.

Upgrading all existing hardware simultaneously can be challenging.

Due to these reasons, it was not adopted.

Weighted Load Balancing

In this approach, varying weights are assigned to different consumers based on processing capacity. Consumers with higher weights are routed more traffic.

This solution works well when:

The capacity of consumers is predictable and remains static.

The workload of messages is uniform, making it easier to estimate machine capacity.

However, there were some challenges in implementing this in a real production environment at Agoda:

Messages often had non-uniform workloads, making capacity estimation difficult.

Dependencies like network and 3rd party connections were unstable, leading to fluctuating capacities.

Frequent addition of new features required extra maintenance to keep the weights updated.
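A small sketch shows why static weights are fragile. The numbers and names below are hypothetical: weights are set once from the benchmarked capacities of the earlier example, and then one worker's real capacity drops, for instance because a 3rd party dependency slows down.

```python
def weighted_shares(configured_capacity, incoming_rate):
    """Split traffic in proportion to statically configured capacities."""
    total = sum(configured_capacity.values())
    return {
        worker: incoming_rate * capacity / total
        for worker, capacity in configured_capacity.items()
    }


# Weights configured once, from benchmarked capacity (messages/second).
configured = {"worker-1": 20, "worker-2": 20, "worker-3": 10}
shares = weighted_shares(configured, incoming_rate=50)

# Hypothetical drift: worker-1 now only manages 12 messages/second,
# but the static weights still route 20 messages/second to it.
actual = {"worker-1": 12, "worker-2": 20, "worker-3": 10}
overload = {w: max(0.0, shares[w] - actual[w]) for w in shares}
```

With accurate weights the shares match capacity exactly (20, 20, and 10). Once the real capacity drifts, `overload` shows worker-1 falling behind by 8 messages every second until someone updates the weights by hand.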

Agoda’s Dynamic Lag-Aware Solution

Instead of the static balancing approaches, Agoda adopted a dynamic, lag-aware approach to solve the Kafka load balancing challenges. They implemented two main strategies:

Lag-aware Producer

Lag-aware Consumer

Lag-aware Producer

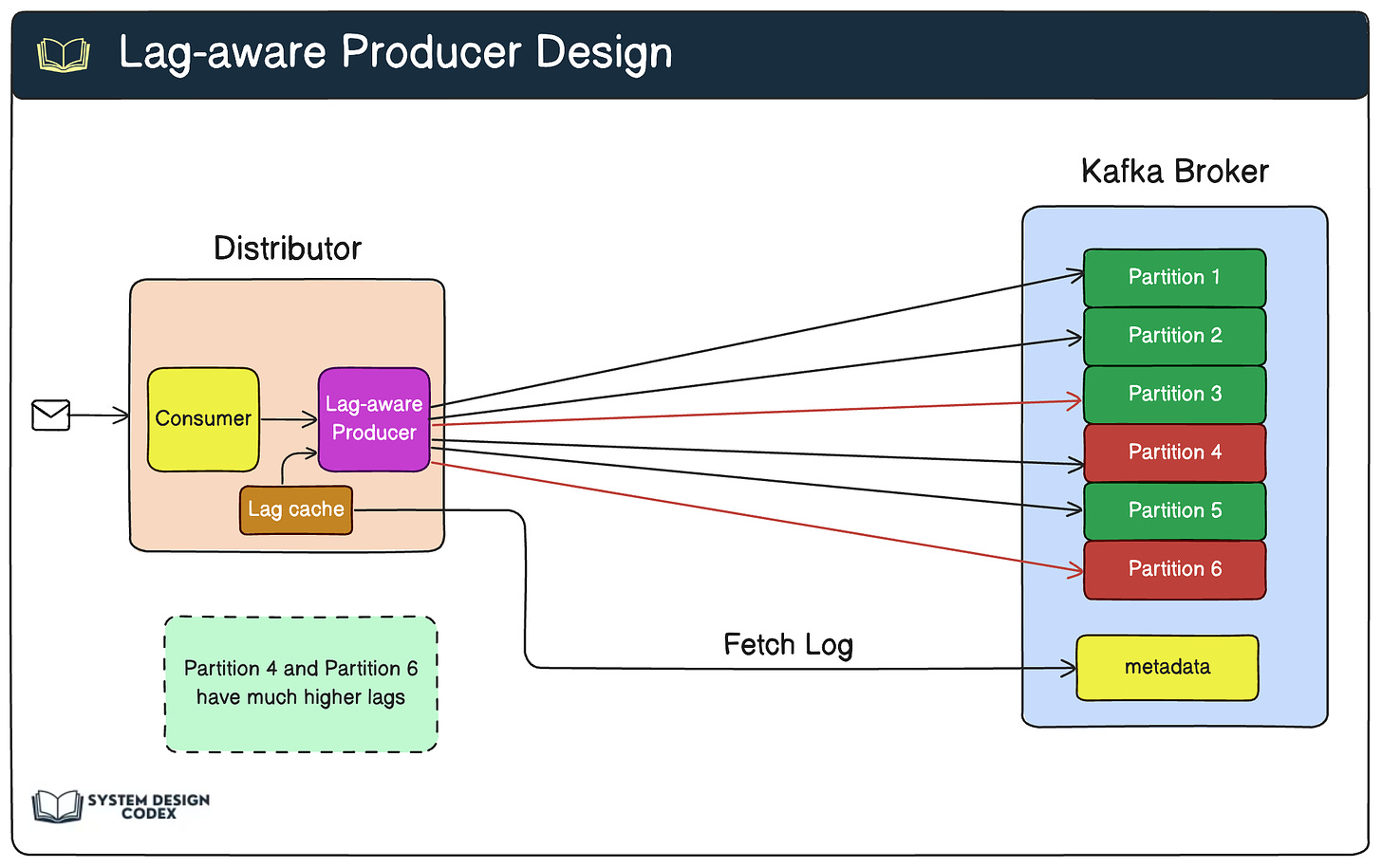

A lag-aware producer dynamically adjusts the partitioning of messages based on the current lag information of the target topic. Here’s how it works:

The producer maintains an internal cache of partition lags to reduce the number of calls to Kafka brokers.

Using the lag data, the producer applies a custom algorithm to publish fewer messages to partitions with high lag and more messages to partitions with low lag.

When lags are balanced and stable, this approach guarantees an even distribution of messages.

For example, let’s consider a scenario where Agoda’s supply system has an internal producer that publishes task messages to its processor. The system has 6 partitions with the following lags:

Partition 1 → 100 messages

Partition 2 → 120 messages

Partition 3 → 90 messages

Partition 4 → 300 messages

Partition 5 → 110 messages

Partition 6 → 280 messages

In this case, the lag-aware producer would publish fewer messages to partitions 4 and 6 due to their high lag while publishing more messages to the other partitions with lower lag.
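The sketch below illustrates the two ideas described above: a cached view of partition lags and a weighting that favors low-lag partitions. It is not Agoda's implementation. The class `LagAwarePartitioner`, the `fetch_lags` callback, the 5-second cache lifetime, and the inverse-lag weighting are all assumptions made for illustration.

```python
import random
import time


class LagAwarePartitioner:
    """Picks a partition with probability inversely related to its lag."""

    def __init__(self, fetch_lags, ttl_seconds=5.0, clock=time.monotonic, rng=None):
        self._fetch_lags = fetch_lags  # hypothetical callback: () -> {partition: lag}
        self._ttl = ttl_seconds
        self._clock = clock
        self._rng = rng if rng is not None else random.Random()
        self._cached_lags = None
        self._fetched_at = None

    def _lags(self):
        # Refresh the cache only when it is empty or older than the TTL,
        # so most publishes do not trigger a lag lookup.
        now = self._clock()
        if self._cached_lags is None or now - self._fetched_at >= self._ttl:
            self._cached_lags = dict(self._fetch_lags())
            self._fetched_at = now
        return self._cached_lags

    def weights(self):
        # Assumed weighting: 1 / (lag + 1). Equal lags give equal weights.
        return {p: 1.0 / (lag + 1) for p, lag in self._lags().items()}

    def choose_partition(self):
        weights = self.weights()
        partitions = list(weights)
        return self._rng.choices(
            partitions, weights=[weights[p] for p in partitions], k=1
        )[0]


def fetch_lags_stub():
    """Stand-in for a real lag lookup, using the lags from the example."""
    return {1: 100, 2: 120, 3: 90, 4: 300, 5: 110, 6: 280}


partitioner = LagAwarePartitioner(fetch_lags_stub, rng=random.Random(42))
target = partitioner.choose_partition()
```

With these lags, partitions 4 and 6 get the smallest weights and are picked least often. When all lags are equal, the weights are equal too, which matches the even distribution described above.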

The diagram below illustrates this.

Lag-aware Consumer

Lag-aware consumers are used when multiple consumer groups subscribe to the same topic. Each group tracks its own lag per partition, so a producer cannot balance for all of them at once. Here’s how they work:

In a downstream service like the Processor, a consumer instance experiencing high lag can proactively unsubscribe from the topic to trigger a rebalance.

During the rebalance, a customized Assignor redistributes the partitions across all consumer instances based on their current lag and processing capacity.

Kafka 2.4’s incremental cooperative rebalance protocol minimizes the performance impact of rebalancing, allowing for more frequent adjustments.

For example, consider a scenario where Agoda’s Processor service has 3 consumer instances consuming from 6 partitions:

Worker 1 → Partitions 1 and 2

Worker 2 → Partitions 3 and 4

Worker 3 → Partitions 5 and 6

If Worker 3 is running on slower hardware, it may experience higher lags in partitions 5 and 6. In this case, Worker 3 can proactively unsubscribe from the topic, triggering a rebalance. The custom Assignor then redistributes the partitions based on the current lag and processing capacity of each worker.
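One way such an assignment could be computed is a greedy pass over the partitions. The sketch below is plain Python with hypothetical lags and processing rates. A real custom assignor would be written against Kafka's Java `ConsumerPartitionAssignor` interface, and Agoda's actual logic may differ.

```python
def lag_aware_assign(partition_lags, worker_rates):
    """Greedy sketch: place the most-lagged partition on the worker that
    would drain its resulting backlog soonest (backlog / processing rate)."""
    assignment = {worker: [] for worker in worker_rates}
    backlog = {worker: 0 for worker in worker_rates}
    by_lag = sorted(partition_lags.items(), key=lambda kv: kv[1], reverse=True)
    for partition, lag in by_lag:
        best = min(
            worker_rates,
            key=lambda w: (backlog[w] + lag) / worker_rates[w],
        )
        assignment[best].append(partition)
        backlog[best] += lag
    return assignment


# Hypothetical numbers: partitions 5 and 6 have built up lag on slow Worker 3.
partition_lags = {1: 100, 2: 120, 3: 90, 4: 110, 5: 300, 6: 280}
worker_rates = {"worker-1": 20, "worker-2": 20, "worker-3": 10}  # messages/second
assignment = lag_aware_assign(partition_lags, worker_rates)
```

In this run the two heavily lagged partitions move to the faster workers, and the slow worker keeps two lightly lagged ones, so the estimated drain times end up close to each other (20, 19.5, and 21 seconds).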

Algorithms for Lag-aware Producer

Coming back to the Lag-aware producer, Agoda used two main algorithms:

Same-Queue Length Algorithm

Outlier Detection Algorithm

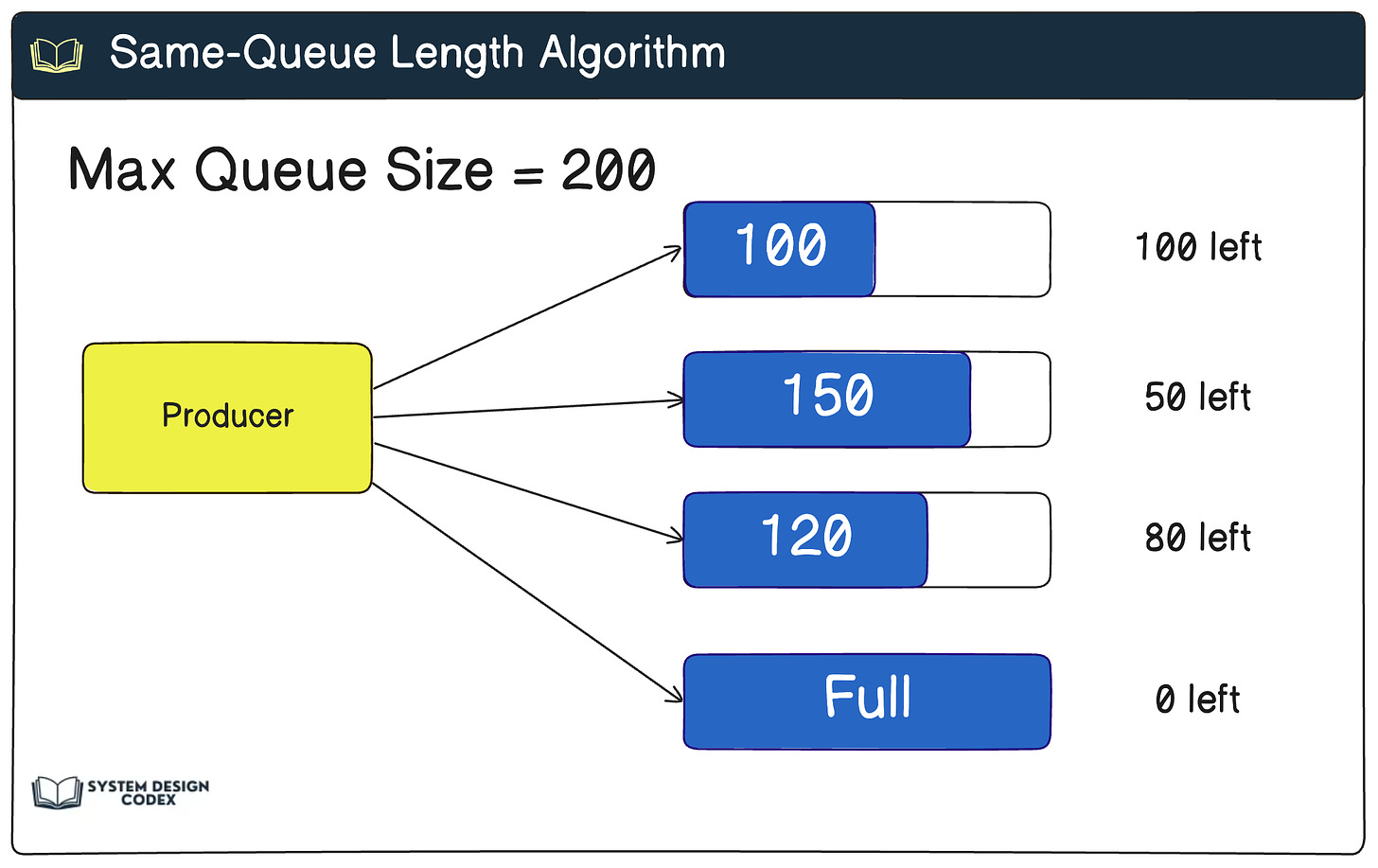

1 - Same-Queue Length Algorithm

The Same-Queue Length Algorithm aims to achieve equal queue lengths across all partitions by adjusting the number of messages published to each partition based on the current lag.

For example, consider a scenario with 4 partitions having the following lags:

Partition 1 → 100 messages

Partition 2 → 150 messages

Partition 3 → 120 messages

Partition 4 → 200 messages

The target queue length would be 200 (the maximum lag). The algorithm would then publish messages as follows:

Partition 1 → Publish 100 messages (200 - 100)

Partition 2 → Publish 50 messages (200 - 150)

Partition 3 → Publish 80 messages (200 - 120)

Partition 4 → Publish 0 messages (already at the target)
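The calculation above fits in a few lines of Python. The function name `same_queue_length_quota` is made up for this sketch, which covers only the quota step and not how the producer applies it.

```python
def same_queue_length_quota(lags):
    """Messages to publish per partition so every queue reaches the max lag."""
    target = max(lags.values())
    return {partition: target - lag for partition, lag in lags.items()}


lags = {1: 100, 2: 150, 3: 120, 4: 200}
quota = same_queue_length_quota(lags)
```

For the lags above, `quota` reproduces the numbers in the example: 100, 50, 80, and 0. When all lags are already equal, every quota is 0, so a real producer would need a fallback such as an even split.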

See the diagram below:

2 - Outlier Detection Algorithm

The Outlier Detection Algorithm identifies partitions with abnormally high lag (outliers) and temporarily stops publishing messages to those partitions to allow them to catch up.

It uses statistical methods like Interquartile Range (IQR) or Standard Deviation (STD) to identify outlier partitions.

For example, consider a scenario with 3 partitions having the following lags:

Partition 1 → 100 messages

Partition 2 → 120 messages

Partition 3 → 300 messages

Using the IQR method, partition 3 is identified as an outlier (slow partition). The algorithm would then:

Stop publishing messages to partition 3

Evenly distribute messages among partitions 1 and 2.

If partition 3 improves and is no longer an outlier, gradually resume publishing messages to it.
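Here is a minimal sketch of the IQR variant, using Python's standard `statistics` module. The function names, the conventional 1.5 multiplier, and the sample lags are assumptions. A hypothetical six-partition sample is used because quartiles computed from only a handful of values produce very wide fences.

```python
import statistics


def outlier_partitions(lags, k=1.5):
    """Partitions whose lag lies above the upper fence Q3 + k * IQR."""
    q1, _, q3 = statistics.quantiles(lags.values(), n=4, method="inclusive")
    upper_fence = q3 + k * (q3 - q1)
    return {p for p, lag in lags.items() if lag > upper_fence}


def publish_weights(lags, k=1.5):
    """Zero weight for outliers, equal weight for every other partition."""
    slow = outlier_partitions(lags, k)
    healthy = [p for p in lags if p not in slow]
    return {p: (1 / len(healthy) if p in healthy else 0.0) for p in lags}


# Hypothetical lags: partition 6 is far behind the rest.
lags = {1: 100, 2: 120, 3: 110, 4: 90, 5: 105, 6: 300}
slow = outlier_partitions(lags)
weights = publish_weights(lags)
```

In this sample only partition 6 is flagged, and the other five partitions share the traffic evenly. The sketch leaves out the gradual resume step. One option would be to raise a recovered partition's weight in small increments instead of restoring it all at once.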

So - what do you think about Agoda’s load-balancing solution for Kafka? Would you have done something differently?

Reference:

How We Solve Load Balancing Challenges in Apache Kafka

That’s it for today! ☀️

See you later with another edition — Saurabh
