SDC#7 - How Rate Limiting Works?

Notion's Journey to Sharding their DB and More...

Hello, this is Saurabh…👋

Welcome to the 77 new subscribers who have joined us since last week.

If you aren’t subscribed yet, join 700+ curious developers looking to expand their knowledge by subscribing to this newsletter.

In this issue, I cover the following topics:

🖥 System Design Concept → How Rate Limiting Works?

🧰 Case Study → Notion’s Journey to Sharding their Monolithic Database

🍔 Food For Thought → Should You Use Microservices?

So, let’s dive in.

🖥 How Rate Limiting Works?

Rate limiting is the concept of controlling the amount of traffic being sent to a resource.

How can you achieve this control?

By using a rate limiter – a component that lets you control the rate of network traffic to an API server or any other application that you want to protect.

Here’s a big-picture view of how a rate limiter sits in the context of a system.

For example, if a client sends 3 requests to a server but the server can only handle 2 requests, you can use a rate limiter to restrict the extra request from reaching the server.

Key Concepts of Rate Limiting

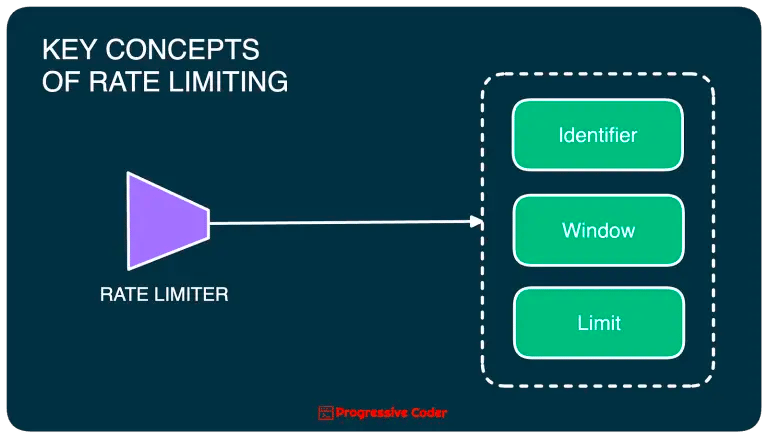

There are 3 key concepts shared by most rate-limiting setups.

Here’s a quick look at each of them:

Limit — Defines the maximum number of requests allowed by the system in a given time span. For example, Twitter (now X) recently rate-limited unverified users to only view 600 tweets per day.

Window — The time period for the limit. It can be anything from seconds to minutes to even days.

Identifier — A unique attribute that lets you distinguish between individual request owners. For example, things like a User ID or IP address can play the role of an identifier.

Designing a Rate Limiter

The basic premise of a rate limiter is quite simple.

On a high level, you count the number of requests sent by a particular user, an IP address or even a geographic location. If the count exceeds the allowable limit, you disallow the request.

However, there are several considerations to be made while designing a rate limiter.

For example:

Where should you store the counters?

What about the rules of rate limiting?

How to respond to disallowed requests?

How to ensure that changes in rules are applied?

How to make sure that rate limiting doesn’t degrade the overall performance of the application?

To balance all of these considerations, you need several pieces that work in combination with each other.

Here’s an illustration that depicts such a system.

Let’s understand what’s going on over here:

When a request comes to the API server, it first goes to the rate limiter component. The rate limiter checks the rules in the rules engine.

The rate limiter proceeds to check the rate-limiting data stored in the cache. This data basically tells how many requests have already been served for a particular user or IP address. The reason for using a cache is to achieve high throughput and low latency.

If the request falls within the acceptable threshold, the rate limiter allows it to go to the API server.

If the request exceeds the limit, the rate limiter disallows the request and informs the client or the user that they have been rate-limited. A common way is to return HTTP status code 429 (too many requests).

There are a couple of improvements you can have over here:

First, instead of returning HTTP status code 429, you can also simply drop a request silently. This is a useful trick to fool an attacker into thinking that the request has been accepted even when the rate limiter has actually dropped the request completely.

Second, you could also have a cache in front of the rules engine to increase the performance. In case of updates to the rules, you could have a background worker process updating the cache with the latest set of rules.

🧰 Notion’s Journey to Sharding their Monolithic Database

In mid-2020, Notion came under an existential threat.

Their Monolithic PostgreSQL DB faced a catastrophic situation.

The same DB had served them for 5 years & 4 orders of magnitude growth.

But it was NO longer sufficient.

The first signs of trouble started with:

Frequent CPU spikes in the database nodes

Migrations became unsafe and uncertain

Too many on-call requests for engineers

The monolithic database was struggling to cope with Notion’s tremendous growth.

Though growth is a happy problem for a product to have, things soon turned ugly.

The inflection point was the start of two major problems:

The Postgres VACUUM process began stalling. This meant no database cleanup and no reclaiming of disk space.

TXID Wraparound. It’s like running out of page numbers for a never-ending book. This was an existential threat to the product.

To get around these problems, Notion decided to shard its monolithic database.

But why sharding?

Vertical scaling i.e. getting a bigger instance wasn’t viable at the scale of Notion. It simply wasn’t cost-effective.

With sharding, you can scale horizontally by spinning up additional hosts.

To retain more control over the distribution of data, Notion decided to go for application-level sharding. In other words, no reliance on 3rd party tools.

Some important design decisions they had to make:

What data to shard?

How to partition the data?

How many shards to have?

Each decision was crucial and knowing more about them offers some great insight for us.

Decision# 1 - What Data to Shard?

Notion’s data model is based on the concept of Block. We talked about this in detail in an earlier edition.

<Link> to Notion Flexible Data Model

To simplify things, one Block is equivalent to a row in the database.

Therefore, the Block table was the highest priority candidate for sharding. But the Block table depends on other tables like Space and Discussion.

That’s why a decision was made to shard all tables transitively related to the Block table.

Decision# 2 - How to Partition the Data?

A good partition scheme is mandatory for efficient sharding.

Notion is a team-based product where each Block belongs to one Workspace.

Therefore, the decision was to use the Workspace ID (UUID) as the partitioning key. This was to avoid cross-shard joins when fetching data for a particular workspace.

See the below illustration.

Decision# 3 - How many Shards?

This was an important decision as it would have a direct impact on the overall scalability of the product.

The goal was to handle existing data and also meet a 2-year usage projection.

Ultimately, this is what happened:

480 logical shards were distributed across 32 physical databases

15 logical shards per database

500 GB upper bound per table

10 TB per physical database

Here’s the infographic from the Notion Engineering Blog.

After these major decisions, the data migration process started.

Notion adopted a 4-step framework to migrate the data from the monolithic database to the new setup.

STEP 1 - Double-Write: New writes get applied to both old and new databases.

STEP 2 - Backfill: Migrate old data to new database.

STEP 3 - Verification: Ensure data integrity.

STEP 4 - Switch-Over: Switch over to the new database.

The below illustration shows the entire migration process:

There were a lot of valuable lessons that the engineering team at Notion learned from this project:

Shard earlier: Premature optimization is bad. But waiting too long before sharding also creates constraints.

Aim for a Zero-Downtime Migration: This results in a good user experience.

Use a Combined Primary Key: Down the line, the Notion team had to move to a composite key.

P.S. This post is inspired by the explanation provided on the Notion Engineering Blog. You can find the original article over here.

🍔 Food For Thought

👉 Should you use Microservices?

I’ve been asked what are the advantages of dividing a monolithic backend service into multiple services (aka microservices).

My honest opinion is that in 90% of the cases, you don’t need microservices. In fact, jumping directly to microservices can also be a disaster.

That’s because the benefits of microservices outweigh the hassle of using them only when you are dealing with a significant amount of scale.

What you definitely need is a Modular Monolith rather than a tightly coupled monolithic application. Even companies operating at the scale of Shopify are doing well with a Modular Monolith.

But of course, microservices do have their place when you need features like:

On-demand scalability of individual parts of your system

High-Availability and Fault Tolerance

Rapid deployment & faster delivery cycles

However, to implement microservices in a way that creates a strategic advantage, you must follow some best practices.

Here’s a post I made a few days back that summarizes the most important practices.

Link to the post below:

https://x.com/ProgressiveCod2/status/1700035282458886245?s=20

👉 How To Stand Out When Applying for a Job?

Getting interview calls in the current job market is tough.

Here’s an excellent tip by Fernando that can help you stand out from the crowd.

Link to the post below:

https://x.com/Franc0Fernand0/status/1701827800187961649?s=20

That’s it for today! ☀️

Enjoyed this issue of the newsletter?

Share with your friends and colleagues

See you later with another value-packed edition — Saurabh.