How HTTP/2 Improves Upon HTTP/1.1

Top Features of HTTP/2

HTTP/1.1 has been the backbone of the web for decades. However, modern web applications place demands on it that it was never designed for: a single page can load dozens of scripts, stylesheets, images, and API responses.

HTTP/2, the next-generation protocol, was designed to address these challenges and significantly improve web performance. These improvements come from key features that optimize how data is transmitted between clients and servers.

Let’s explore these features in detail.

1 - Binary Framing Layer

HTTP/2 moves away from the text-based communication of HTTP/1.1 and adopts a binary framing layer. Instead of exchanging data as plain text, HTTP/2 encodes messages into a compact binary format.

How It Works:

HTTP/2 splits messages into smaller units called frames.

Each frame starts with a fixed 9-byte header that records its payload length, type, flags, and the ID of the stream it belongs to, so the client and server can reassemble frames into complete requests or responses.

These frames are sent over a single TCP connection.
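
To make the framing concrete, here is a minimal Python sketch of the 9-byte frame header defined in the HTTP/2 specification: a 24-bit payload length, an 8-bit type, an 8-bit flags field, and a 31-bit stream identifier (the top bit is reserved). The `FrameHeader`, `pack_frame_header`, and `unpack_frame_header` names are illustrative, not from any library, and the sketch handles only the header, not frame payloads.

```python
import struct
from typing import NamedTuple


class FrameHeader(NamedTuple):
    length: int     # 24-bit payload length
    type: int       # 8-bit frame type (e.g. 0x1 = HEADERS)
    flags: int      # 8-bit flags
    stream_id: int  # 31-bit stream identifier


def pack_frame_header(h: FrameHeader) -> bytes:
    """Encode a frame header into its 9-byte wire form."""
    if not 0 <= h.length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= h.stream_id < 2**31:
        raise ValueError("stream_id must fit in 31 bits")
    # 3-byte length, then type, flags, and a 4-byte field holding the stream ID
    return h.length.to_bytes(3, "big") + struct.pack(">BBI", h.type, h.flags, h.stream_id)


def unpack_frame_header(data: bytes) -> FrameHeader:
    """Decode the first 9 bytes of a frame back into a FrameHeader."""
    if len(data) < 9:
        raise ValueError("need at least 9 bytes")
    length = int.from_bytes(data[0:3], "big")
    ftype, flags, stream_field = struct.unpack(">BBI", data[3:9])
    return FrameHeader(length, ftype, flags, stream_field & 0x7FFFFFFF)  # drop reserved bit


# Usage: a HEADERS frame (type 0x1) on stream 1 with END_HEADERS (0x4) set
hdr = FrameHeader(length=16, type=0x1, flags=0x4, stream_id=1)
wire = pack_frame_header(hdr)
print(wire.hex())                        # 000010010400000001
print(unpack_frame_header(wire) == hdr)  # True
```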

Why It’s Faster:

Binary frames are more compact than text and much simpler to parse: the receiver reads fixed-size fields instead of scanning for line breaks and delimiters, which is faster and less error-prone.

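Splitting messages into frames allows them to be interleaved with other messages, eliminating the HTTP-level head-of-line blocking of HTTP/1.1, where one slow response held up every response queued behind it on the same connection. Head-of-line blocking at the TCP layer remains: a single lost packet still stalls all streams until it is retransmitted.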

Example Use Case:

Imagine loading a web page with multiple resources like images, scripts, and stylesheets. HTTP/2 sends these resources as binary frames over a single connection, reducing overhead and speeding up the page load.

2 - Multiplexing

Multiplexing is one of the cornerstone features of HTTP/2. It allows multiple requests and responses to be sent simultaneously over a single connection.

How It Works:

Frames from different streams (requests/responses) are interleaved during transmission.

Each frame carries a stream identifier that lets the recipient reassemble the original messages.
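
The interleaving and reassembly steps can be sketched in a few lines of Python. This is a toy model, not a real HTTP/2 implementation: each `(stream_id, payload)` tuple stands in for a DATA frame, and the round-robin policy in the hypothetical `interleave` function is just one possible scheduling choice. The stream IDs 1, 3, and 5 are odd because client-initiated streams use odd identifiers.

```python
from collections import defaultdict
from itertools import zip_longest


def interleave(streams: dict[int, list[bytes]]) -> list[tuple[int, bytes]]:
    """Round-robin the chunks of several streams onto one connection."""
    frames = []
    ids = list(streams)
    # Take one chunk from each stream per round until every stream is drained
    for round_chunks in zip_longest(*(streams[s] for s in ids)):
        for sid, chunk in zip(ids, round_chunks):
            if chunk is not None:
                frames.append((sid, chunk))
    return frames


def reassemble(frames: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Group frames by stream ID and concatenate them in arrival order."""
    messages: dict[int, bytes] = defaultdict(bytes)
    for sid, chunk in frames:
        messages[sid] += chunk
    return dict(messages)


# Usage: an HTML document, a stylesheet, and a script sharing one connection
streams = {1: [b"<html>", b"</html>"], 3: [b"body{}"], 5: [b"let x", b" = 1;", b"\n"]}
frames = interleave(streams)
print([sid for sid, _ in frames])  # [1, 3, 5, 1, 5, 5]
print(reassemble(frames)[5])       # b'let x = 1;\n'
```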

Why It’s Faster:

In HTTP/1.1, browsers limit the number of simultaneous connections to a single host (commonly six), and each connection carries only one request at a time, so additional requests have to queue. Multiplexing removes this bottleneck by carrying all requests over a single connection.

Interleaving frames ensures that one slow resource doesn't block others, avoiding the strictly in-order delivery of HTTP/1.1, where even pipelined responses must come back in the order they were requested.
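
A rough back-of-the-envelope model shows why the connection limit hurts. The sketch below assumes six connections per host, one request in flight per HTTP/1.1 connection (no pipelining), and ignores bandwidth, TCP slow start, and resources discovered later while parsing; the function names are hypothetical.

```python
import math


def http1_request_rounds(num_resources: int, max_connections: int = 6) -> int:
    """Toy model: each HTTP/1.1 connection handles one request per round trip."""
    return math.ceil(num_resources / max_connections)


def http2_request_rounds(num_resources: int) -> int:
    """Toy model: all requests are multiplexed onto one connection at once."""
    return 1 if num_resources > 0 else 0


print(http1_request_rounds(30))  # 5
print(http2_request_rounds(30))  # 1
```

Under these assumptions, 30 resources need five waves of requests over HTTP/1.1 but can all be requested at once over HTTP/2.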

Example Use Case:

When a client requests multiple assets from a server, HTTP/2 multiplexes these requests. As a result, the client doesn’t have to wait for one request to complete before starting another, significantly improving loading times for resource-heavy web pages.

3 - Stream Prioritization

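Stream prioritization in HTTP/2 lets the client signal how important each stream (request or response) is relative to the others, so that critical resources can be delivered first. This original scheme, defined in RFC 7540, was later deprecated by RFC 9113 because it proved complex and was not uniformly implemented; RFC 9218 defines a simpler replacement.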

How It Works:

Each stream can have a priority weight (a value between 1 and 256) and a dependency on another stream.

Priorities are hints: servers can use them to allocate bandwidth and processing more efficiently, but they are not required to follow them.
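
In the original RFC 7540 scheme, sibling streams that depend on the same parent share resources in proportion to their weights, and a PRIORITY frame carries a 5-byte payload: an exclusive flag, a 31-bit stream dependency, and the weight minus one. The Python sketch below models both; `bandwidth_shares` and `encode_priority_payload` are illustrative names, and a real server may schedule differently because priorities are only hints.

```python
def bandwidth_shares(weights: dict[int, int], capacity: float) -> dict[int, float]:
    """Split capacity among sibling streams in proportion to their weights (1..256)."""
    for stream_id, weight in weights.items():
        if not 1 <= weight <= 256:
            raise ValueError(f"stream {stream_id}: weight must be in 1..256")
    total = sum(weights.values())
    return {sid: capacity * w / total for sid, w in weights.items()}


def encode_priority_payload(depends_on: int, weight: int, exclusive: bool = False) -> bytes:
    """Build the 5-byte PRIORITY payload: E bit + 31-bit dependency, then weight - 1."""
    if not 1 <= weight <= 256:
        raise ValueError("weight must be in 1..256")
    if not 0 <= depends_on < 2**31:
        raise ValueError("stream dependency must fit in 31 bits")
    field = depends_on | (0x80000000 if exclusive else 0)
    return field.to_bytes(4, "big") + bytes([weight - 1])  # weight is sent as 0..255


# Usage: an HTML/CSS stream 3 (weight 192) and an image on stream 5 (weight 64)
print(bandwidth_shares({3: 192, 5: 64}, capacity=100.0))  # {3: 75.0, 5: 25.0}
print(encode_priority_payload(depends_on=0, weight=256).hex())  # 00000000ff
```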

Why It’s Faster:

Browsers can prioritize essential resources like HTML and CSS over less critical assets like background images or analytics scripts.

Helps the most important content render first, improving the user experience.

Example Use Case:

For a video streaming platform, stream prioritization ensures that the video playback stream gets higher priority over other streams like metadata or thumbnails, ensuring smooth playback.

4 - Server Push

HTTP/2 introduces server push, which allows servers to proactively send resources to the client without waiting for explicit requests.

How It Works:

When a client requests a web page, the server can "push" additional resources (e.g., stylesheets or JavaScript files) that it predicts the client will need.

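The server announces each pushed resource with a PUSH_PROMISE frame and then sends it on a new stream alongside the original response, saving the round trips the client would otherwise spend requesting it.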
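
Here is a toy model of the server-side bookkeeping: when a request arrives, the server looks up the assets it wants to push and reserves a new stream for each. The `PUSH_MANIFEST` mapping, the paths, and the class names are hypothetical. The one detail taken from the protocol is that server-initiated (pushed) streams use even-numbered IDs, starting at 2. The sketch only produces promise records; it does not encode PUSH_PROMISE frames or their header blocks.

```python
from dataclasses import dataclass
from itertools import count

# Hypothetical push configuration: which assets to push for which page
PUSH_MANIFEST: dict[str, list[str]] = {
    "/index.html": ["/styles.css", "/app.js"],
}


@dataclass(frozen=True)
class PushPromise:
    associated_stream_id: int  # client request stream the promise is sent on
    promised_stream_id: int    # new server-initiated stream that will carry the asset
    path: str


class ServerConnection:
    """Tracks the stream IDs a server reserves for pushed responses."""

    def __init__(self) -> None:
        self._even_ids = count(2, 2)  # server-initiated streams use even IDs

    def promises_for(self, request_stream_id: int, path: str) -> list[PushPromise]:
        return [
            PushPromise(request_stream_id, next(self._even_ids), asset)
            for asset in PUSH_MANIFEST.get(path, [])
        ]


conn = ServerConnection()
for promise in conn.promises_for(request_stream_id=1, path="/index.html"):
    print(promise.promised_stream_id, promise.path)
# 2 /styles.css
# 4 /app.js
```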

Why It’s Faster:

Eliminates the latency caused by waiting for the client to analyze the HTML and then request additional resources.

Can reduce the overall time to fully load a web page, although pushing a resource the client already has cached wastes bandwidth.

Example Use Case:

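When a client requests an HTML page, the server can push the linked CSS and JavaScript files so they arrive without separate requests. In practice, server push proved hard to use well, and major browsers, including Chrome, have since removed support for it; the 103 Early Hints response is the commonly recommended alternative.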

5 - HPACK Header Compression

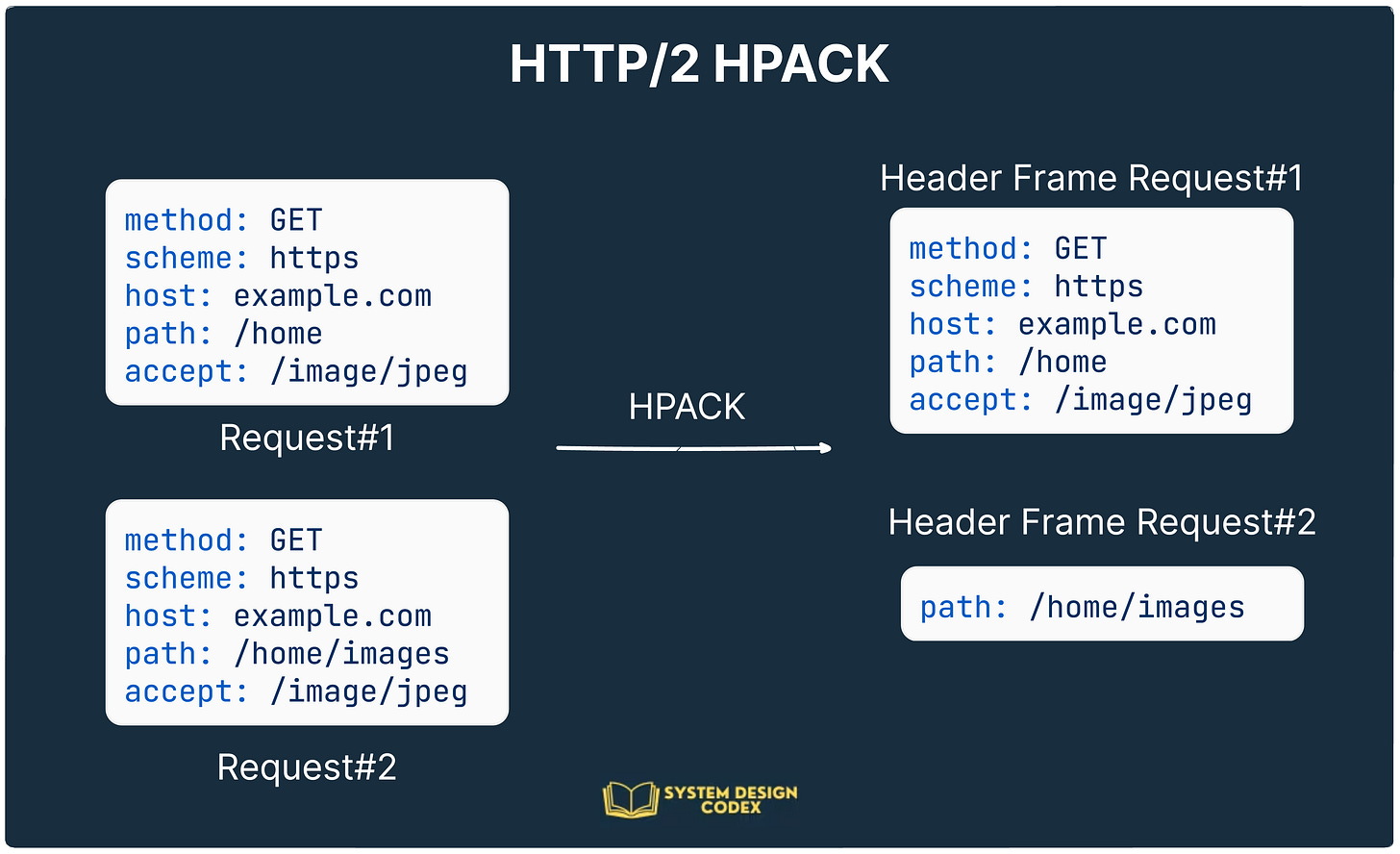

HTTP/1.1 was inefficient with headers, as each request sent the full header information, even if it was redundant across multiple requests. HTTP/2 addresses this with HPACK, a specialized header compression algorithm.

How It Works:

HPACK uses a static table of 61 common header fields plus a dynamic table of fields seen earlier on the connection; the encoder and decoder each maintain identical copies of these tables.

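A header already in a table is sent as a small index instead of its full text; new or changed headers are sent as literals (optionally Huffman-coded) and can be added to the dynamic table, significantly reducing redundancy.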
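
The indexing idea can be illustrated with a deliberately simplified table in Python. It is not real HPACK: it omits the static table and Huffman coding, evicts by entry count rather than by the table's size in bytes, and emits Python tuples instead of bytes. What it shares with HPACK is that encoder and decoder each keep their own copy of the dynamic table, update it in the same order, and give the newest entry the lowest dynamic index. The class name and the example token are hypothetical.

```python
from collections import deque


class ToyHeaderTable:
    """Simplified HPACK-style dynamic table (no static table, no Huffman coding)."""

    def __init__(self, max_entries: int = 64) -> None:
        # Newest entry sits at the left (index 1); a full deque drops the oldest
        self.entries: deque[tuple[str, str]] = deque(maxlen=max_entries)

    def encode(self, headers: list[tuple[str, str]]) -> list[tuple[str, object]]:
        out: list[tuple[str, object]] = []
        for pair in headers:
            if pair in self.entries:
                out.append(("indexed", self.entries.index(pair) + 1))
            else:
                out.append(("literal", pair))
                self.entries.appendleft(pair)
        return out

    def decode(self, encoded: list[tuple[str, object]]) -> list[tuple[str, str]]:
        headers = []
        for kind, value in encoded:
            if kind == "indexed":
                headers.append(self.entries[value - 1])
            else:
                headers.append(value)
                self.entries.appendleft(value)  # mirror the encoder's update
        return headers


encoder, decoder = ToyHeaderTable(), ToyHeaderTable()
request = [(":method", "GET"), (":path", "/api/items"), ("authorization", "Bearer abc123")]
first = encoder.encode(request)
second = encoder.encode(request)
print([kind for kind, _ in first])  # ['literal', 'literal', 'literal']
print(second)                       # [('indexed', 3), ('indexed', 2), ('indexed', 1)]
```

On the second request all three headers collapse to small indexes, which is where the savings for repetitive API traffic come from.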

Why It’s Faster:

Compressing headers saves bandwidth, especially for applications that make frequent requests with similar headers (e.g., APIs).

Reduces the size of transmitted data, speeding up communication.

Example Use Case:

Consider a single-page application that makes many API calls with identical headers (e.g., authentication tokens). HPACK ensures that only the unique data is sent for each request, improving overall performance.

👉 So, which other HTTP/2 features have you come across?

Also, there is now HTTP/3, which takes a fundamentally different approach from HTTP/1.1 and HTTP/2: it runs over QUIC (on top of UDP) instead of TCP. More on that in another post.

That’s it for today! ☀️
