SDC#27 - Facebook's Memcache Breakdown

Deep dive into handling billions of requests & trillions of data items...

Hello, this is Saurabh…👋

Welcome to the 761 new subscribers who have joined us since last week.

If you aren’t subscribed yet, join 6000+ curious Software Developers looking to expand their system design knowledge by subscribing to this newsletter.

In this edition, I cover one detailed case study.

🧰 Facebook’s Memcache Breakdown

So, let’s dive in.

🧰 Facebook’s Memcache Breakdown

A few days ago, I was watching the movie Social Network and there was this intense scene where Mark Zuckerberg confronts his co-founder Eduardo Saverin for freezing the server payments.

Here’s what Zuckerberg said:

Even a few people leaving would reverberate through the entire user base.

The users are interconnected, that is the whole point.

College kids are online because their friends are online, and if one domino goes, the other dominos go, don't you get that?

No matter what you may think about the movie as a whole, the above statement by Zuckerberg’s character was spot on.

A social network cannot go down. Period.

When I first used Facebook, it almost seemed like a miracle.

Everything felt so snappy.

The way your timeline got populated with interesting stuff, the way the like button felt so intuitive and how the notifications made you feel excited.

There was nothing like it at the time.

As a user, it was a fascinating experience and there was an inherent joy in just spending time on Facebook. But over the years, curiosity has taken over.

The curiosity of how things work under the hood!

Facebook’s paper on Scaling Memcache provides a great sneak peak into the inner workings of the world’s most popular social network. Though it was published many years ago, the learnings from the paper are still relevant for software engineers at all levels.

This is my attempt to break down Facebook’s memcache adoption in a fun and interesting manner.

But first off, let’s understand why Facebook needed something like Memcache.

The main reason was popularity. At any given point of time, millions of people were accessing Facebook from all over the world.

What does this mean in computing terms?

Facebook needed almost real-time communication

On-the-fly content aggregation

Access and update super popular shared content

Scale to handle billions of user requests per second

Store trillions of items across multiple geographic locations

To support the vision, Facebook took up the open source version of Memcached and enhanced it to build a distributed key-value store.

This enhanced version was known as Memcache.

What is Memcached?

Memcached is an in-memory key-value store.

It provides a simple set of operations:

set

get

delete

As mentioned earlier, the open-source version provided a single machine in-memory hash table. Facebook took this version as a basic building block and created a distributed key-value store known as Memcache.

So - “Memcached” is the source code or a running binary and “Memcache” stands for the distributed system

There were two main ways in which Facebook used Memcache:

Query Cache

This was to reduce the read load on the databases.

Facebook uses Memcache as a demand-filled look aside cache.

“Demand filled” because the data gets into the cache only when it’s requested.

“Look aside” because they first check the cache and go to the database only in case of a miss.

The below diagram shows what a demand-filled look-aside cache looks like:

The Write Path is pretty interesting over here.

After updating the database for a particular key, they don’t update the cache. Instead, they delete the data for that key from the cache (also known as invalidation).

A key takeaway from this is that Memcache is not the authoritative source of data. The authoritative source is still the database (MySQL in this case).

Generic Cache

Facebook also uses Memcache as a more general purpose key-value store.

Teams can store pre-computed results from ML algorithms into Memcache. These results can then be accessed by other applications when needed.

Since most of the infrastructure is already in place, it takes very little work for other services to use Memcache.

The Overall Architecture

The overall architecture of Facebook (at the time of writing the paper) consisted of three major parts:

Region - These are basically global locations where Facebook servers are located. There is a Primary region and multiple Secondary regions. Each region consists of multiple frontend clusters and only one storage cluster.

Frontend Cluster - Consists of multiple web servers and memcache servers.

Storage Cluster - This contains the source-of-truth database that holds the authoritative copy of every data item.

The below diagram shows the high-level view of the architecture:

There were a couple of important design goals for this architecture:

A change must impact a user facing or operational issue. Optimizations that have limited benefit are rarely considered.

Willingness to expose slightly stale data instead of allowing excessive load on the backend. Ultimately, they don’t want to risk availability. Remember the “dominoes going down” statement by Zuckerberg.

With this in mind, of course, there were multiple challenges that Facebook had to solve at various levels:

Challenge#1 - Managing latency, load and failures within a cluster

Challenge#2 - Managing replication of data within a region’s boundaries

Challenge#3 - Managing consistency of data across regions

Let’s look at each challenge and how Facebook solves it with their Memcache implementation.

Intra-Cluster Challenges

As we saw earlier, every cluster consists of webservers and Memcache servers.

At the cluster-level, the focus was on three important goals:

Reducing latency

Reducing load on the database

Handling failures

Reducing Latency

Every frontend cluster has hundreds of Memcached servers and items are distributed across these servers using Consistent Hashing.

I wrote a small introduction to Consistent Hashing in my earlier post. You can check it out below:

At their scale, a single web request can result in 100s of fetch requests for the data on Memcache. For example, loading a popular page with a ton of posts and comments and so on.

In other words, one request means webservers must communicate with many Memcached servers in a short period of time. And mind you, this is applicable for both cache hit and cache miss scenarios.

Therefore, a single server can become a bottleneck for many webservers resulting in high latency.

Facebook uses a couple of important tricks to reduce latency.

👉 Parallel Requests and Batching

Imagine ordering multiple items at a restaurant and the waiter going back and forth multiple times to fetch those items. It’s going to take longer when compared to the waiter bringing everything together.

The same optimization is done over here.

They construct a DAG (Directed Acyclic Graph) to represent dependencies between the data. The webserver uses the DAG to maximize the number of items that can be fetched concurrently. For example, fetching the comments of a post when fetching a post.

👉 Using UDP instead of TCP

Clients use UDP for get() requests to reduce latency and overhead.

Why UDP?

Because UDP is connectionless and each thread in the webserver is allowed to directly communicate with the memcached servers.

But UDP can drop packets!

Yes, of course. This is handled by detecting packets that are dropped or out of order and treating them as a cache miss on the client side.

“What about set() and delete() operations?” - you may ask.

Those are still managed with TCP. However, these requests go through a special proxy known as mcrouter that runs on the same machine as the webserver. The mcrouter is like the middleman that performs a bunch of duties such as serialization of data, compression, request routing, batching and error handling.

“Why not UDP for set() and delete() as well?” - you may ask.

TCP makes the operations more reliable and removes the need for adding a retry mechanism. This is important in the case of update and delete.

Reducing Load

What’s the number one goal for Memcached?

It’s to reduce the frequency of fetching data from the database.

Basically, reduce the load on the database. That’s because database queries are expensive operations.

Using a look-aside cache solves this problem to a great extent. However, at Facebook’s scale, a couple of important problems can happen quite easily.

Stale sets

Thundering herds

The stale set situation happens when outdated data is set in the cache and there’s no easy way of invalidating it.

The thundering herd problem occurs in a highly concurrent environment where a cache miss triggers a thundering herd of requests to the database.

Here’s a quick diagram to visualize these issues:

I also talked about them in more detail in the previous edition (3rd topic).

Anyways, it was important for Facebook to minimize the probability of these two critical issues.

And to achieve this, they used a technique known as leasing.

Leasing helped solve both stale sets and thundering herds issue.

So - what’s the deal with leasing?

Let’s say a client requests Memcache for a particular key and it’s a cache miss.

Now, when there’s a cache miss, it’s the client’s responsibility to fetch the data from the database and update Memcache so that future requests for the same key don’t get a cache miss.

But what if other clients are also getting the same cache miss?

You suddenly might have many responsible clients trying to update Memcache.

With leasing in place, the Memcache instance gives a lease to a particular client to set data into the cache when there’s a cache miss. A lease is just a 64-bit token that’s bound to the specific key that the client requested.

The client has to provide the token when setting the value in the cache. With this token, Memcached can verify whether the data should be stored or not and thus deal with concurrent writes.

See below diagram that explains the concept:

Also, verification can fail if Memcached has already invalidated the lease token due to receiving a delete request for that item, thereby preventing the stale set scenario.

This takes care of stale sets.

“What about thundering herds?” - you may ask.

Well - a slight modification to the leasing approach also helps solve the thundering herd issue.

Memcached server regulates the rate at which it returns the leasing tokens (for example, returning a token once every 10 seconds per key).

If any request for the key’s value comes within - let’s say 10 seconds - of a lease token being issued, Memcached sends a special response asking the client to wait for a short period of time.

The idea behind this is that the client holding the lease token would soon update the cache successfully. When the waiting clients retry, the data is most likely present in the cache.

The million dollar question is - “Did these techniques really help Facebook reduce the load?”

As per their measurements, the leasing approach alone helped reduce peak DB query rates from 17K/seconds to 1.3K/seconds.

Handling Failures

When do failures occur in Facebook’s typical scenario?

If clients aren’t able to fetch data from Memcache, it results in excessive load on the backend servers resulting in cascading failures.

There are two scales to this problem:

A small number of hosts becoming inaccessible due to network failure

Widespread outage (such as a cluster going down) that affects a big percentage of hosts

For dealing with a widespread outage, Facebook diverts web requests to other clusters thereby removing the load from the problematic cluster.

For small outages, they rely on an automated remediation system. However, it takes time to kick in. To insulate the backend services during this time, Facebook dedicates a set of machines (named Gutter) to take over responsibilities.

Typically, Gutter machines account for 1% of the Memcached servers within a cluster.

“But why Gutter machines?” - you may ask. “Why can’t they just re-distribute the keys among the remaining servers?”

While rehashing keys among remaining servers is a common technique, the risk of cascading failures was too great for Facebook. This was primarily due to high chances of non-uniform key access frequency.

For instance, a single key can account for almost 20% of the requests to a server and the server that becomes responsible for this hot key can also get overloaded.

The Gutter approach mitigates this risk.

But how are these Gutter machines even used?

When a Memcached client receives no response (i.e. not even a cache miss), the client assumes that the server has failed and issues a request to the Gutter pool.

If the request to the Gutter returns a cache miss, the client inserts the data into the Gutter machine after querying the database.

Gutter entries expire quickly in order to remove the need for invalidations.

The below diagram illustrates this:

Backend is protected even though there might be some stale data that gets served. But this is an acceptable trade-off for them. Remember, this was one of the design goals that stale data is okay in certain scenarios.

Intra-Region Challenge

The biggest intra-region challenge Facebook had to solve is around handling invalidations across multiple frontend clusters.

As we discussed earlier, one region at Facebook consists of multiple frontend clusters but only one storage cluster. And each frontend cluster consists of multiple webservers and Memcached servers.

Also, the storage cluster (the MySQL database) holds the authoritative copy of each data item.

Users may connect to different frontend cluster when requesting for data depending on how those requests are load-balanced. This ends up caching the data in multiple clusters.

So, you may have a situation where a particular key is cached in the Memcached servers of multiple clusters within the region.

See the below diagram that tries to depict this situation from the perspective of a region:

For example, the key “foo” and “bar” are present in multiple frontend clusters within a region.

How do you invalidate this data across all the clusters of a region in case of any update?

Cluster-Level

Any webserver in a particular cluster that modifies the data is responsible for invalidating the data within its own cluster.

This provides read-after-write consistency for the user that made the request. It also reduces the time that stale data exists within the cluster after the data has been updated.

I wrote about read-after-write consistency in an earlier post about database replication problems. You can check it out if interested:

Region-Level

For region-level invalidation, the task of invalidation isn’t given to the webserver but to the storage cluster itself.

How does that work?

By means of an invalidation pipeline.

An invalidation daemon named

mcsquealruns on every database server within the storage cluster.It inspects the commit log, extracts any deletes and broadcasts them to the memcache deployments in every frontend cluster within the region

Basically,

mcsquealbatches these deletes into fewer packets and sends them to dedicated servers runningmcrouterinstances in each cluster.The

mcrouterinstance unpacks individual deletes and routes them to the correct Memcached server located within the frontend cluster.

The below diagram shows this setup in more detail.

Across-Region Challenges

Till now, we were discussing things mainly at a region level.

However, operating at the scale of Facebook requires data centers to be located globally.

Facebook found several advantages to a broader geographic placement of data.

First, putting web servers closer to the end users help reduce latency

Second, geographic diversity can mitigate the impact of events such as natural disasters and power failures.

And third, new locations can also provide cheaper power and economic incentives.

Ultimately, no matter how deep your pockets are, cost plays a huge role in many software decisions.

However, expanding to multiple regions also opens up its own challenges.

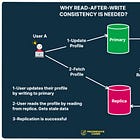

The biggest challenge is around maintaining consistency between data in Memcached and the persistent storage across the regions.

In Facebook’s setup, one region holds the primary databases while other geographic regions contain only read-only replicas. The replicas are kept up-to-date with the primary using MySQL’s replication mechanism.

But whenever replication is involved, there is going to be some replication lag. In other words, the replica databases can lag behind the primary database.

There are two parts to the entire game.

Writes from the Primary Region

Consider a webserver in the primary region (let’s say its US) that receives a user’s request to update their profile picture.

This change needs to be propagated to other regions as well to maintain consistency

Basically, we need to do two things:

The replica database has to be updated.

Also, the cache in the secondary regions need to be invalidated.

The trick is to manage the invalidation along with the replication.

Why?

If the invalidation arrives in the secondary region (let’s say Europe) before the actual change has been replicated to the database in that region, you can have a race condition.

Here’s how:

Someone in Europe region tries to view the profile picture

The system fetches the information from the cache. But the cache has been invalidated.

It then goes to the replica database in the region which is still lagging. Therefore, the fetch request gets the old picture and sets it within the cache.

Later on, the replication is successful.

However, the cache is now set with stale data and subsequent requests will continue fetching this stale data.

The below sequence diagram explains the complete scenario:

So - how does Facebook handle this scenario?

To avoid such race conditions, Facebook made sure that the storage cluster that has the most up-to-date information is responsible for sending invalidations within a region. It uses the same mcsqueal setup we saw in the previous section.

This ensures that invalidations don’t get sent prematurely to the replica regions before the change has been fully replicated.

Writes from the Replica Region

When dealing with writes from a non-primary region, things change.

Here’s the sequence of events:

User updates their profile picture from a secondary region. While reads are served from the replica or secondary region but the writes go to the primary region.

After the write is successfully made to the primary region’s database, it also need to be replicated to the secondary regions as well.

However, there’s a risk that before the replication catches up, a read request on the replica region may fetch and cache stale data.

So - how did Facebook handle this scenario?

They used the concept of a remote marker.

Basically, the remote marker indicates that the data in the local replica is potentially stale and the query should be redirected to the primary region instead.

Here’s how it works:

When a webserver wishes to update the data for key K, it sets a remote marker R in the replica region.

Then, it performs the write to the primary region.

Also, the key K is deleted from the replica region’s Memcached servers.

A read request comes along for K in the replica region but the webserver won’t be able to find the key in the cache.

It checks whether remote marker R exists. If yes, the query is directed to the primary region.

The below diagram tries to show all the steps:

“What about the latency?” - you may ask.

Isn’t it inefficient to first check cache, then check the remote marker and then direct the query to the primary region?

Of course, it is.

But, in this particular scenario, Facebook chose to trade-off latency in case of a cache miss in exchange for a decreased probability of reading stale data.

Single Server Optimizations

Apart from the bigger architectural approaches we discussed, Facebook also spent a significant time improving the performance of a single Memcached server.

A few important optimizations they made were as follows:

Automatic expansion of the hash table to avoid look-up time going to O(n).

Make the server multi-threaded

Giving each thread its own UDP port to reduce contention when sending replies.

Organizing memory using an Adaptive Slab Allocator

Since each server is part of the whole, these improvements led to efficiency gains across the cluster and region levels.

Conclusion

All in all, Facebook’s Memcached implementation offers an excellent sneak peek into the challenges of building a social network and dealing with large volumes of data.

It also offers a great look at the multiple challenges with caching.

In case you have any observations or views about this, it would be great if you leave a comment.

The main reference for this post comes form the original research paper published by Facebook. However, a lot of the gaps in explanation have been filled after looking into the various other topics.

That’s it for today! ☀️

Enjoyed this issue of the newsletter?

Share with your friends and colleagues

See you next week with another value-packed edition — Saurabh

This was a fantastic article! Really cool to see not just how Facebook handles caching, but handles caching in a way that is distributed across the world. I especially liked how you talked about the tradeoffs they considered with latency versus stale data.

Comprehensive breakdown of Facebook's Memcache! What I love about these well-researched issue is that I often learn the names of the systems/algorithms I already implemented. For example, I had no idea these are called a ”demand-filled look-aside cache”, but I definitely built them! Thanks Saurabh!