Cache Eviction Strategies

Choose the right one for your application

Caching is a crucial technique for improving application performance and responsiveness. By keeping frequently accessed data in memory, a cache reduces database load and lowers latency.

However, cache memory is limited, and when it reaches capacity, some data must be removed to make space for new entries.

This process is known as cache eviction.

Choosing the right cache eviction strategy can significantly impact performance, memory efficiency, and hit rates. Let’s look at the most popular strategies.

1 - Time-to-Live (TTL)

How TTL Works

Each cached item is assigned a fixed expiration time.

Once the time period expires, the item is automatically removed, regardless of how frequently it is accessed.
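The expiry rule above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular cache implements it: the class name `TTLCache`, the `clock` parameter, and the `purge_expired` helper are assumptions made for this example. Expired entries are dropped lazily on read, with an optional sweep for entries that are never read again.

```python
import time


class TTLCache:
    """Minimal TTL cache sketch: each entry expires a fixed time after it is written."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # injectable clock (assumption) so expiry can be tested without sleeping
        self._store = {}     # key -> (value, expires_at)

    def set(self, key, value):
        # The expiration time is fixed at write time; reads do not extend it.
        self._store[key] = (value, self.clock() + self.ttl)

    def get(self, key, default=None):
        entry = self._store.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Lazy expiration: the stale entry is removed when it is next read.
            del self._store[key]
            return default
        return value

    def purge_expired(self):
        """Active expiration: remove every entry whose TTL has passed."""
        now = self.clock()
        expired = [k for k, (_, exp) in self._store.items() if now >= exp]
        for k in expired:
            del self._store[k]
        return len(expired)
```

Note that a TTL on its own does not cap the number of entries, so in practice it is often combined with a size-based policy such as the ones below.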

Best Use Cases

Session Management: User login sessions can expire after a set time.

Cache Invalidation for APIs: TTL bounds how stale a cached response can get by removing it after a fixed interval.

DNS Caching: DNS records use TTL to update host-to-IP mappings periodically.

Pros

✔ Simple to implement—no need to track access frequency.

✔ Ensures automatic cache refresh for time-sensitive data.

Cons

✖ Premature eviction—Frequently accessed data may expire before it's needed again.

✖ Wasted memory—Unused data stays in cache until TTL expires, even if it's never accessed.

Example

# Setting TTL in Redis (Item expires in 30 seconds)

SET user:123 "John Doe"

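EXPIRE user:123 30

In Redis, the EXPIRE command sets a 30-second timeout on the key, after which Redis deletes it. The value and the timeout can also be set atomically in a single command: SET user:123 "John Doe" EX 30.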

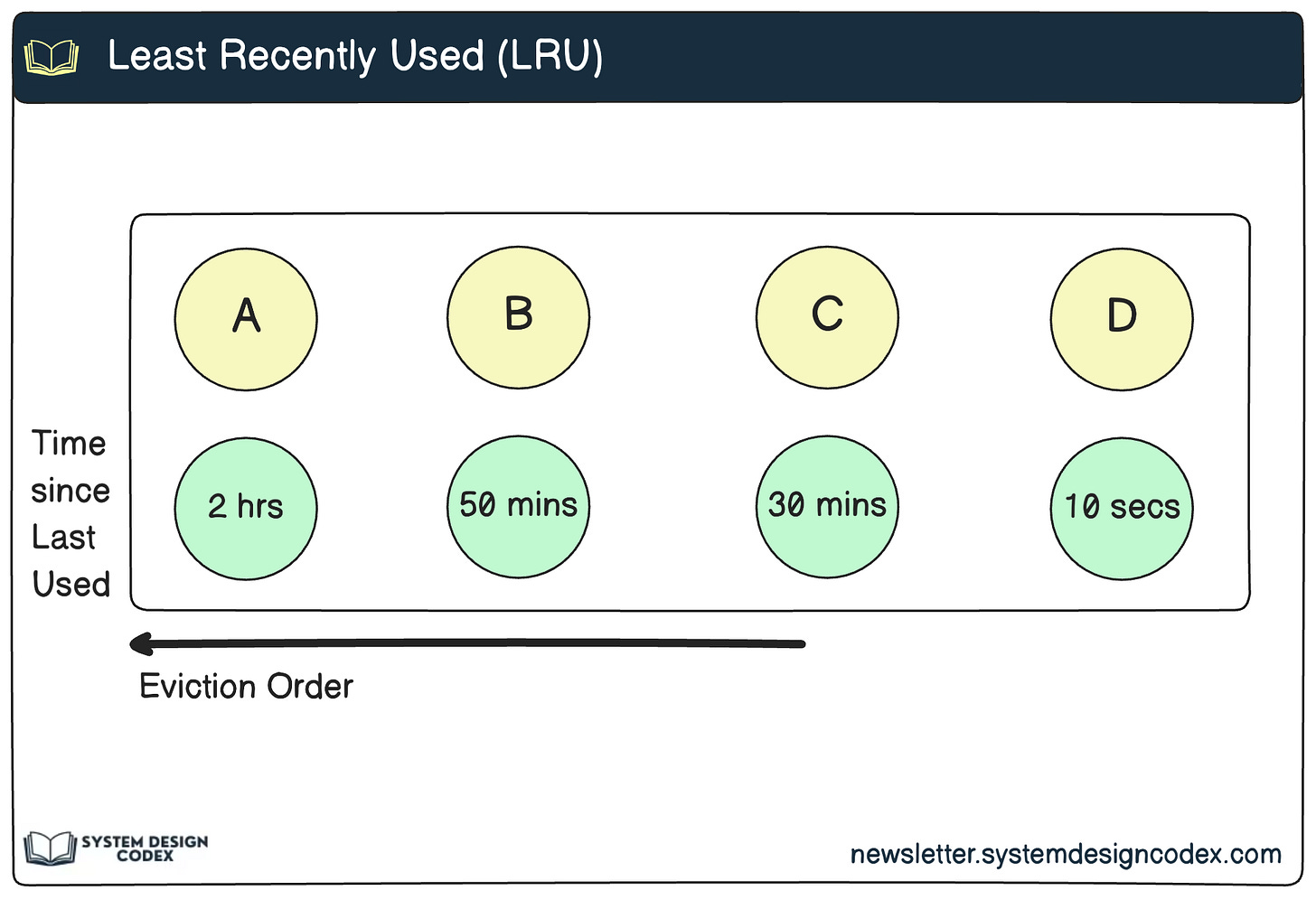

2 - Least-Recently Used (LRU)

How LRU Works

When the cache is full, the least recently accessed item is evicted first.

LRU assumes that recently accessed data is more likely to be accessed again soon (temporal locality).
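A common way to get this behavior in Python is an `OrderedDict` that keeps keys in recency order. The sketch below assumes a fixed entry-count capacity; the class name `LRUCache` and its method names are chosen for illustration only.

```python
from collections import OrderedDict


class LRUCache:
    """LRU sketch: the least recently used key sits at the front of the OrderedDict."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)      # a read makes the key the most recent
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)  # a write also counts as a use
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used key

    def keys(self):
        """Keys ordered from least to most recently used."""
        return list(self._data)
```

For example, with capacity 2, inserting `a` and `b`, reading `a`, and then inserting `c` evicts `b`, because `a` was touched more recently.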

Best Use Cases

Web Browsers: Recently visited web pages remain cached.

Database Query Caching: Recently executed queries are kept in cache.

Operating Systems: Page replacement in virtual memory commonly uses LRU approximations, such as the clock algorithm, to decide which pages stay in RAM.

Pros

✔ Effective for workloads with temporal locality—recently used data remains available.

✔ Self-adjusting—Adapts dynamically to access patterns.

Cons

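✖ Bookkeeping overhead: An O(1) implementation needs both a hash map and a doubly linked list, and every read has to update the usage order.

✖ Not ideal for cyclic workloads: If items are accessed in a repeating loop that is larger than the cache, each item is evicted just before it is needed again.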
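The cyclic-workload weakness is easy to reproduce with a small simulation. The helper below (`lru_hit_count`, a name made up for this sketch) replays an access sequence through an LRU cache and counts hits. Looping over four keys with room for only three yields no hits at all, while one extra slot makes every access after the first pass a hit.

```python
from collections import OrderedDict


def lru_hit_count(accesses, capacity):
    """Replay `accesses` through an LRU cache of size `capacity` and count hits."""
    cache = OrderedDict()
    hits = 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)         # refresh recency on a hit
        else:
            cache[key] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used key
    return hits


loop = [0, 1, 2, 3] * 10                   # 40 accesses cycling over 4 keys
print(lru_hit_count(loop, capacity=3))     # 0: each key is evicted just before reuse
print(lru_hit_count(loop, capacity=4))     # 36: only the first pass misses
```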

3 - Least Frequently Used (LFU) – Prioritizing Popular Items

How LFU Works

Each cached item is associated with a frequency counter.

When the cache is full, the least frequently accessed item is removed.
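The counter-based rule can be sketched as follows. This is a deliberately simple version that scans all keys on eviction (O(n)); production LFU implementations typically use frequency buckets or approximate counters instead. The class name `LFUCache` and the tie-break rule (evict the least recently used among equally infrequent keys) are assumptions of this example.

```python
class LFUCache:
    """LFU sketch: evict the key with the lowest access count."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._values = {}
        self._counts = {}      # key -> number of reads and writes
        self._last_used = {}   # key -> logical timestamp, used only to break ties
        self._tick = 0

    def _touch(self, key):
        self._tick += 1
        self._counts[key] = self._counts.get(key, 0) + 1
        self._last_used[key] = self._tick

    def get(self, key, default=None):
        if key not in self._values:
            return default
        self._touch(key)
        return self._values[key]

    def put(self, key, value):
        if key not in self._values and len(self._values) >= self.capacity:
            # Lowest count loses; among equals, the least recently used one goes.
            victim = min(self._values,
                         key=lambda k: (self._counts[k], self._last_used[k]))
            for table in (self._values, self._counts, self._last_used):
                del table[victim]
        self._values[key] = value
        self._touch(key)

    def __contains__(self, key):
        return key in self._values
```

With capacity 2, a key that has been read several times survives while newer keys with a count of 1 keep replacing each other, which is also the cold-start behavior in miniature.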

Best Use Cases

Content Delivery Networks (CDNs): Frequently accessed content remains cached.

E-commerce Product Listings: Popular products are cached for quick retrieval.

Machine Learning Caches: Keeps frequently used datasets in memory.

Pros

✔ Better long-term cache hit rates than LRU in stable workloads.

✔ Ensures popular items remain available, even if they haven't been accessed recently.

Cons

✖ More complex to implement—requires additional metadata to track usage frequency.

✖ Cold Start Issue—Newly added items start with a low count, leading to premature eviction.

4 - Most Recently Used (MRU) – Evicting Fresh Data First

How MRU Works

When the cache is full, the most recently accessed item is evicted first.

MRU assumes that an item that was just accessed is the least likely to be needed again soon.
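MRU differs from LRU only in which end of the recency order gets evicted. The sketch below makes that explicit; the class name `MRUCache` and its methods are illustrative assumptions.

```python
from collections import OrderedDict


class MRUCache:
    """MRU sketch: when full, evict the most recently used key."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()   # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data[key] = value
            self._data.move_to_end(key)
            return
        if len(self._data) >= self.capacity:
            self._data.popitem(last=True)  # evict the most recently used key
        self._data[key] = value

    def keys(self):
        return list(self._data)
```

With capacity 2, inserting `a` and `b`, reading `a`, and then inserting `c` evicts `a`, the opposite of what LRU would do.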

Best Use Cases

Streaming Services: The last-played song or video might not be needed again soon.

Batch Processing Systems: Keeps older results in memory, discarding the newest.

Database Caching for Bulk Queries: Avoids keeping temporary recent queries in cache.

Pros

✔ Works well for workloads with minimal temporal locality.

✔ Efficient when older data is more valuable than recently accessed data.

Cons

✖ Counterintuitive behavior—recently accessed items get removed first.

✖ Less effective for general caching scenarios compared to LRU.

Example

A video player cache may use MRU to evict the last-played video first, ensuring older unwatched videos stay cached.

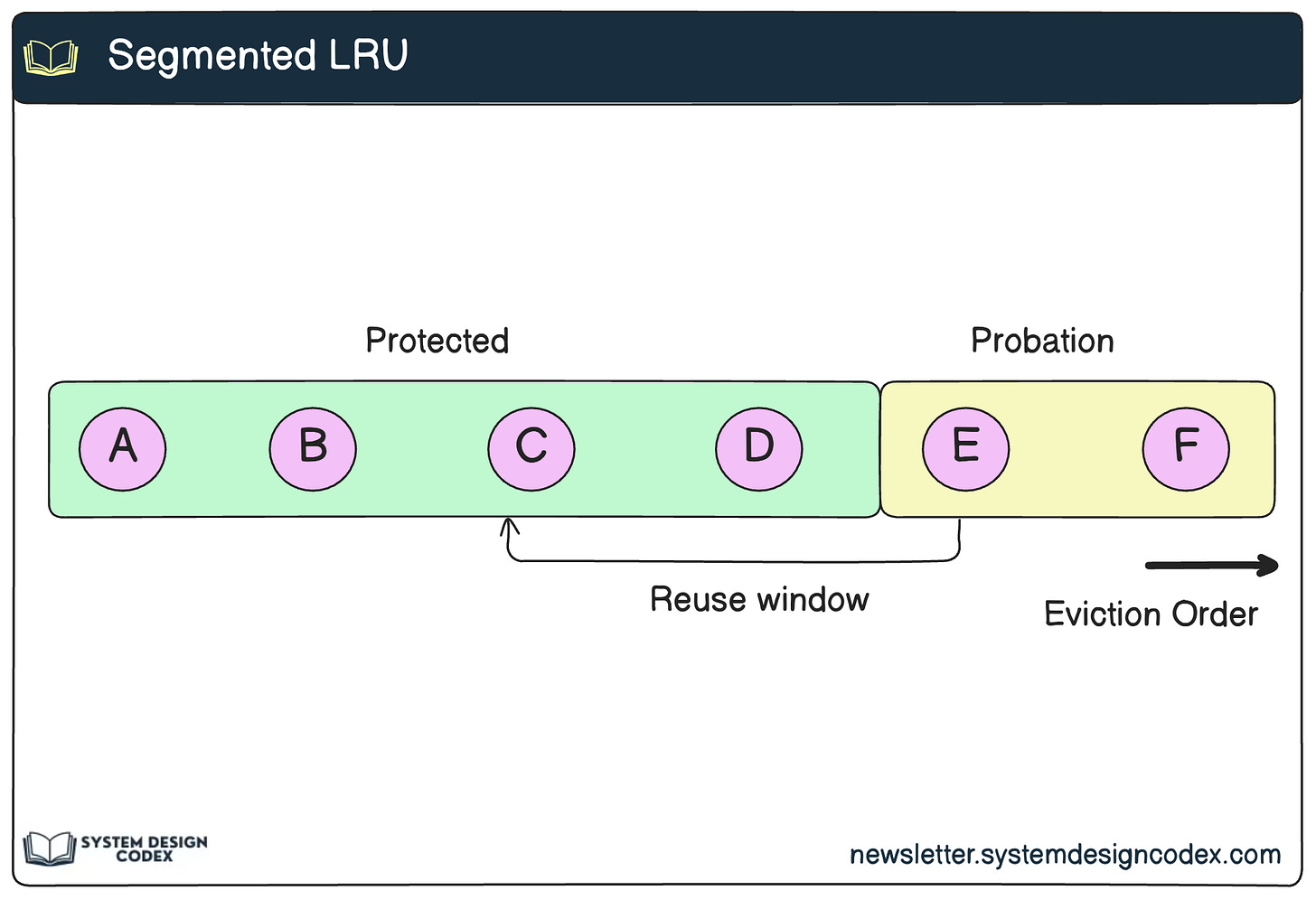

5 - Segmented LRU (SLRU) – A Hybrid Approach

How SLRU Works

The cache is split into two sections:

Probationary Segment: Stores newly added items.

Protected Segment: Stores frequently accessed items.

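An item that is hit while in the probationary segment is promoted to the protected segment. Evictions come from the least recently used end of the probationary segment, and an item pushed out of a full protected segment is demoted back to probationary rather than dropped, so promoted items remain cached longer.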
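The two-segment flow can be sketched with a pair of ordered dictionaries. This is one common formulation of SLRU, written for illustration only. The class name `SLRUCache`, the separate size parameters, and the choice not to promote on overwrite are assumptions of this example.

```python
from collections import OrderedDict


class SLRUCache:
    """Segmented LRU sketch: new keys start on probation, a hit promotes them."""

    def __init__(self, probation_size, protected_size):
        self.probation_size = probation_size
        self.protected_size = protected_size
        self.probation = OrderedDict()   # seen once; evictions happen here
        self.protected = OrderedDict()   # hit at least once while on probation

    def get(self, key, default=None):
        if key in self.protected:
            self.protected.move_to_end(key)
            return self.protected[key]
        if key in self.probation:
            value = self.probation.pop(key)
            self._promote(key, value)
            return value
        return default

    def put(self, key, value):
        if key in self.protected:
            self.protected[key] = value
            self.protected.move_to_end(key)
        elif key in self.probation:
            self.probation[key] = value  # overwrite without promoting (assumption)
            self.probation.move_to_end(key)
        else:
            self._insert_probation(key, value)

    def _promote(self, key, value):
        self.protected[key] = value
        if len(self.protected) > self.protected_size:
            # Demote the protected segment's LRU item instead of dropping it.
            old_key, old_value = self.protected.popitem(last=False)
            self._insert_probation(old_key, old_value)

    def _insert_probation(self, key, value):
        self.probation[key] = value
        if len(self.probation) > self.probation_size:
            self.probation.popitem(last=False)  # the only place items leave the cache
```

A burst of one-off keys can only churn the probationary segment, so keys that have already proven useful are not flushed by a scan.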

Best Use Cases

Database Caching: Ensures frequently used queries are prioritized.

Hybrid Cache for Dynamic Workloads: Adapts better than pure LRU or LFU.

Large-Scale Distributed Caches: Recent versions of Memcached use a segmented LRU for eviction. Redis does not use SLRU; it offers approximated LRU and LFU policies instead.

Pros

✔ Balances LRU and LFU benefits.

✔ Minimizes frequent eviction of useful data.

Cons

✖ Requires tuning of segment sizes for optimal performance.

✖ More bookkeeping than plain LRU, since two ordered segments must be maintained and items move between them.

So, would you add any other strategy to this list?

Shoutout

Here are some interesting articles I’ve read recently:

Four Essential Steps To Take Before Making Any Technical Decision In 2025 by Petar Ivanov

I used to think testing in production was a bad joke. I was wrong by Raul Junco

12 Startups in 12 Months: The Challenge That Could Change Your Life in 2025 by Akos Komuves

My strategy to learn faster than anyone else: Copying from the best by Fran Soto

That’s it for today! ☀️
