8 Strategies for Reducing Latency

High latency can cost your system

Latency is the new downtime in distributed systems.

High latency can render an application effectively unusable, frustrating end-users and negatively impacting business outcomes just as much as a complete outage.

For modern-day users, a slow website can be even more frustrating than one that is down.

At the very least, an unavailable website fails immediately instead of wasting the user's time.

Considering this, it is wise for developers to invest in learning about low-latency strategies.

Here are the top 8 strategies to reduce latency that you must be aware of (in no particular order):

1 - Caching

Caching is a fundamental technique used in distributed systems to improve performance and reduce the load on backend services.

The primary goal of caching is to minimize costly database lookups and avoid performing high-latency computations repeatedly.

By storing frequently accessed data in a cache, applications can retrieve the data much faster compared to fetching it from the source, such as a database or a remote service.

Caches are typically implemented using high-speed memory or fast storage systems that provide low-latency access to the cached data.

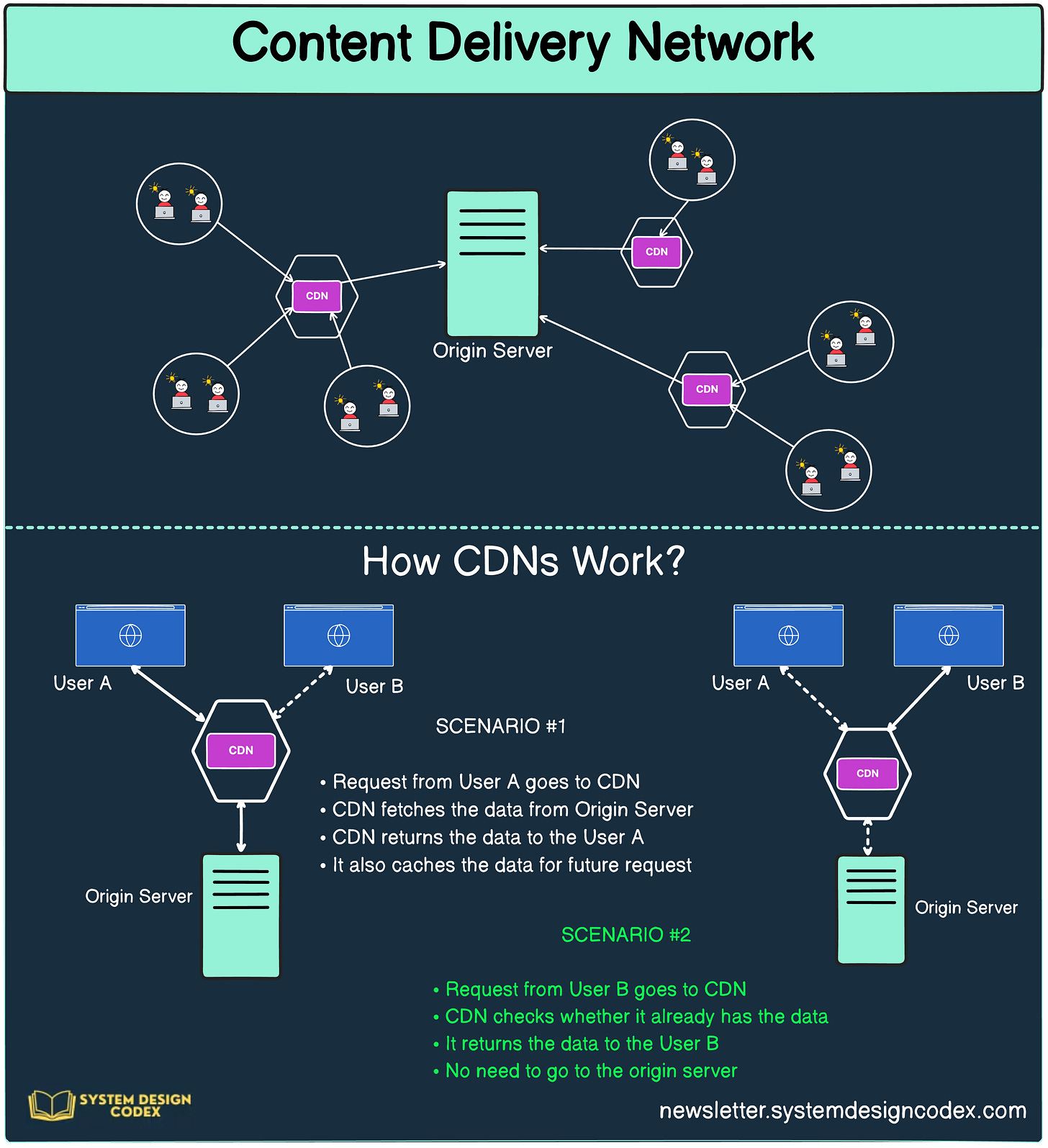
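Here is a minimal sketch of the idea, assuming a simple in-process cache with a time-to-live (TTL). The names `TTLCache`, `get_or_load`, and `slow_database_lookup` are made up for illustration; a production system would more likely use a shared cache such as Redis or Memcached.

```python
import time


class TTLCache:
    """A tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds=60.0, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}   # key -> (value, expiry timestamp)

    def get_or_load(self, key, loader):
        """Return the cached value for key, calling loader(key) only on a miss."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]          # cache hit: skip the slow source entirely
        value = loader(key)          # cache miss or expired entry: go to the source
        self._entries[key] = (value, now + self._ttl)
        return value


db_calls = []  # records every trip to the (pretend) database


def slow_database_lookup(user_id):
    """Hypothetical stand-in for an expensive database query."""
    db_calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=30.0)
first = cache.get_or_load(42, slow_database_lookup)   # miss: hits the database
second = cache.get_or_load(42, slow_database_lookup)  # hit: served from memory
```

The second call never reaches `slow_database_lookup`, which is the whole point. The TTL is one way to keep cached data from going stale forever; choosing its value is a trade-off between freshness and hit rate.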

2 - Content Delivery Networks (CDN)

While caching is a powerful technique for improving performance and reducing the load on backend services, it can be taken to the next level by leveraging Content Delivery Networks (CDNs).

A CDN is a geographically distributed network of servers that work together to deliver content to end users with high availability and performance.

CDNs reduce latency by caching content closer to the end users.

When a user requests content from a website or application that utilizes a CDN, the request is routed to the nearest CDN server instead of the origin server.

If the requested content is already cached on the CDN server, it is served directly from the cache, eliminating the need to retrieve it from the origin server. This reduces the distance the data has to travel and minimizes the response time.
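The flow above can be simulated in a few lines. This is only a toy model under stated assumptions: `EdgeServer`, `nearest_edge`, the region names, and the latency numbers are all hypothetical, and real CDNs typically pick an edge through DNS or anycast routing and honor cache-control rules that are not modeled here.

```python
class EdgeServer:
    """A toy CDN edge node with its own cache in front of a shared origin."""

    def __init__(self, name, latency_ms_by_region, origin):
        self.name = name
        self.latency_ms_by_region = latency_ms_by_region  # assumed, static numbers
        self.origin = origin  # dict standing in for the origin server
        self.cache = {}

    def fetch(self, path):
        """Return (content, source), where source is 'edge-cache' or 'origin'."""
        if path in self.cache:
            return self.cache[path], "edge-cache"
        content = self.origin[path]   # cache miss: one trip back to the origin
        self.cache[path] = content    # keep a copy close to the users
        return content, "origin"


def nearest_edge(edges, user_region):
    """Pick the edge with the lowest latency to the user's region."""
    return min(edges, key=lambda edge: edge.latency_ms_by_region[user_region])


origin = {"/logo.png": b"<image bytes>"}
edges = [
    EdgeServer("frankfurt", {"eu": 12, "us": 95}, origin),
    EdgeServer("virginia", {"eu": 90, "us": 10}, origin),
]

edge = nearest_edge(edges, "eu")
_, first_source = edge.fetch("/logo.png")   # first request fills the edge cache
_, second_source = edge.fetch("/logo.png")  # later requests stay at the edge
```

Only the first request for a given path pays the cost of reaching the origin; every later request from that region is answered by the nearby edge.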

3 - Load Balancing

Load balancing is a key technique for distributing workload across multiple machines.

By employing load balancers to distribute incoming traffic across multiple servers, we ensure that no single server becomes overwhelmed. In other words, response times stay predictable even as traffic grows.

When a client sends a request to a distributed system, the load balancer acts as the initial point of contact. It receives the incoming request and intelligently routes it to one of the available servers based on predefined algorithms or policies.

Common load-balancing algorithms include:

Round Robin

Least Connections

IP Hash

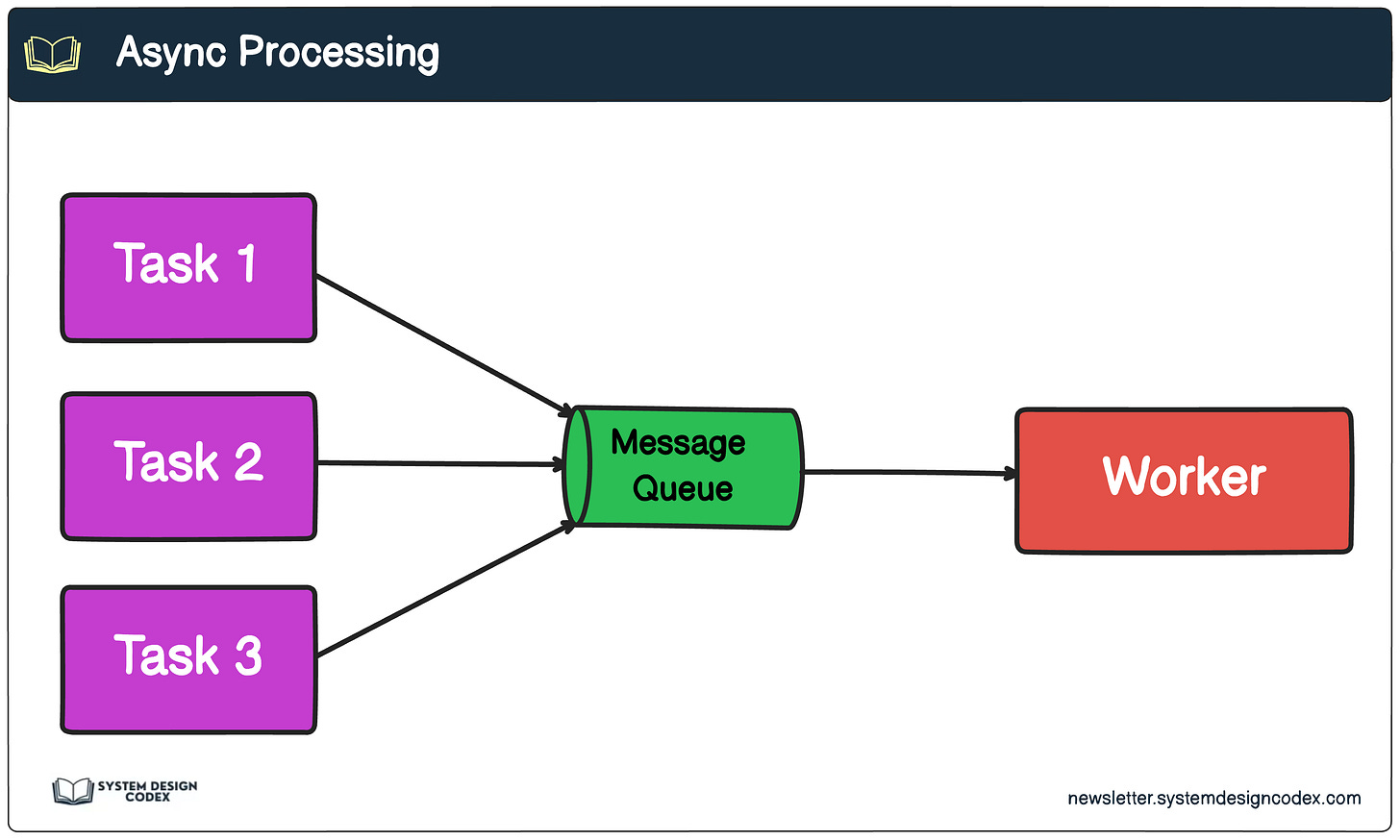
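To make the three algorithms concrete, here is a rough sketch of each as a small Python class. The class names and the `pick` and `release` methods are invented for this example and do not correspond to any particular load balancer's API.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand requests to servers in a fixed rotation."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1   # the caller must release() when the request ends
        return server

    def release(self, server):
        self.active[server] -= 1


class IPHash:
    """Map a client IP to a server so the same client keeps landing on the same one."""

    def __init__(self, servers):
        self._servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._servers)
        return self._servers[index]


servers = ["app-1", "app-2", "app-3"]
rr = RoundRobin(servers)
rotation = [rr.pick() for _ in range(4)]
```

Round Robin is the simplest and works well when requests cost roughly the same. Least Connections adapts better when some requests are much slower than others, and IP Hash is useful when a client should stick to one server, for example to benefit from a local cache.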

4 - Async Processing

When dealing with long-running tasks in a distributed system, it's important to consider the user experience and the overall system responsiveness.

Implementing asynchronous processing is a powerful technique that allows the system to respond quickly to the user while performing further processing in the background.

Here’s how it works:

In an asynchronous processing model, when a user initiates a long-running task, such as generating a complex report or processing a large dataset, the system immediately acknowledges the request and returns a response to the user.

The response indicates that the request has been received and is being processed.

Behind the scenes, the system enqueues the task into a message queue or a task queue.

A separate background worker process or a pool of worker processes continuously monitors this queue, picking up tasks as they become available.

The worker process executes the long-running task asynchronously, without blocking the main application thread or impacting the system's responsiveness.
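The steps above can be sketched with nothing but the standard library. This is a single-process illustration, assuming an in-memory queue and one worker thread; `submit`, `worker`, `results`, and `generate_report` are hypothetical names, and a real system would usually put a broker such as RabbitMQ, Kafka, or SQS between the application and its workers.

```python
import queue
import threading
import uuid

task_queue = queue.Queue()
results = {}                      # job_id -> result (or the exception that was raised)
results_lock = threading.Lock()


def submit(task, *args):
    """Enqueue the task and return a job ID right away, without running it."""
    job_id = str(uuid.uuid4())
    task_queue.put((job_id, task, args))
    return job_id


def worker():
    """Pull tasks off the queue forever and store their outcomes."""
    while True:
        job_id, task, args = task_queue.get()
        try:
            outcome = task(*args)
        except Exception as exc:  # keep the worker alive if one task fails
            outcome = exc
        with results_lock:
            results[job_id] = outcome
        task_queue.task_done()


threading.Thread(target=worker, daemon=True).start()


def generate_report(n):
    """Hypothetical long-running job."""
    return sum(i * i for i in range(n))


job_id = submit(generate_report, 1_000_000)  # returns immediately
# ... respond to the user here with job_id ...
task_queue.join()                            # only for the demo: wait for the worker
report = results[job_id]
```

In a web application, `submit` would run inside the request handler and the client would poll (or be notified) with the job ID later. The `task_queue.join()` call exists only so the demo can read the result.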

5 - Database Indexing

Databases can be a huge source of latency if not designed optimally.

To ensure optimal database performance and faster query processing, it is crucial to create the right indexes and to find and rewrite slow queries.

Indexes play a vital role in improving the efficiency of database queries.

By creating appropriate indexes on frequently accessed columns or combinations of columns, the database can quickly locate and retrieve the required data without scanning the entire table. This reduces the time and resources needed to process queries, especially for large datasets.
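You can watch an index change the query plan using SQLite, which ships with Python. The sketch below assumes a hypothetical `users` table and an index named `idx_users_email`; the exact wording of the plan text varies between SQLite versions, so treat the output as indicative.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's EXPLAIN QUERY PLAN output as one readable string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # column 3 holds the plan detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
)

lookup = "SELECT * FROM users WHERE email = ?"
params = ("user9999@example.com",)

plan_before = query_plan(conn, lookup, params)  # a full table scan
conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = query_plan(conn, lookup, params)   # a search using the new index

print("before:", plan_before)
print("after: ", plan_after)
```

Most databases offer an equivalent of `EXPLAIN`, and running it on your slowest queries is a good first step before adding indexes. Keep in mind that every index also has to be updated on writes, so index the columns you actually filter or join on.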

6 - Data Compression

When transmitting data over a network, especially in distributed systems, it's important to consider the size of the data being sent.

Larger data payloads can consume more network bandwidth, increase latency, and impact overall system performance.

To mitigate these issues, a common technique is to compress the data before sending it over the network.

Data compression involves reducing the size of the data by applying compression algorithms that remove redundancy and represent the data more efficiently. There are several benefits to compressing the data:

Reduced bandwidth usage

Improved latency

Efficient resource utilization

Cost savings
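As a quick illustration, here is a sketch that gzips a JSON payload before it would be sent over the wire. The helper names `compress_payload` and `decompress_payload` and the sample records are made up for the example.

```python
import gzip
import json


def compress_payload(obj):
    """Serialize obj to JSON and gzip the resulting bytes."""
    raw = json.dumps(obj).encode("utf-8")
    return gzip.compress(raw)


def decompress_payload(blob):
    """Reverse compress_payload: gunzip, then parse the JSON."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))


# Repetitive data, like most API responses, compresses very well.
records = [{"id": i, "status": "active", "region": "eu-west"} for i in range(1000)]

raw_size = len(json.dumps(records).encode("utf-8"))
compressed = compress_payload(records)

print(f"raw: {raw_size} bytes, gzipped: {len(compressed)} bytes")
```

Compression is not free: it spends CPU time on both ends, and very small payloads can even grow slightly. It tends to pay off for larger, text-heavy responses on links where bandwidth is the bottleneck.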

7 - Precaching

Precaching is a technique used to proactively cache data in anticipation of future requests.

The idea is to cache in advance data that has a high probability of being accessed so that you can serve it faster when requested by a user.

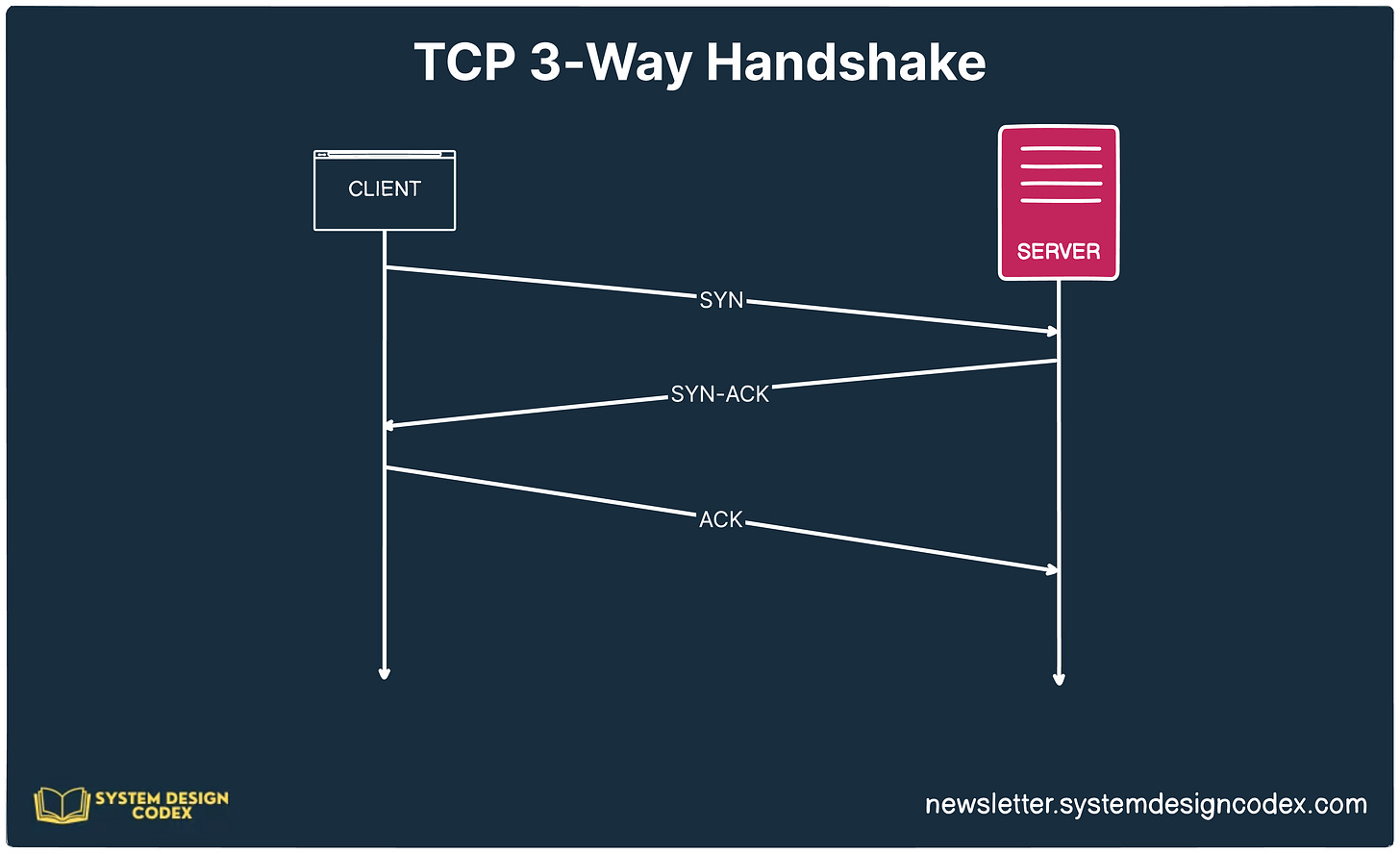
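One simple way to decide what to precache is to look at what was requested most often in the past. The sketch below assumes that approach; `warm_cache`, `render_page`, and the access log are hypothetical, and real systems may predict demand in other ways (for example, from a schedule or from what a user is likely to click next).

```python
from collections import Counter


def warm_cache(cache, access_log, loader, top_n=3):
    """Load the top_n most frequently requested keys into cache ahead of time."""
    hottest = [key for key, _count in Counter(access_log).most_common(top_n)]
    for key in hottest:
        cache[key] = loader(key)  # pay the loading cost now, not on a user request
    return hottest


def render_page(path):
    """Hypothetical stand-in for an expensive page render."""
    return f"<html>content of {path}</html>"


access_log = ["/home", "/pricing", "/home", "/docs", "/home", "/pricing", "/about"]
cache = {}
warmed = warm_cache(cache, access_log, render_page, top_n=2)
```

A function like this could run at deploy time or on a schedule, so the first real visitor to a popular page already gets a cache hit.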

8 - Use Keep-Alive Connections

The TCP handshake is a critical process that establishes a reliable connection between two endpoints before data transmission can begin.

However, this process adds network latency and consumes resources: the handshake costs a full round trip between the client and the server before any data can be sent, and a TLS handshake on top of it adds more.

To reduce the overhead associated with establishing new TCP connections, it is recommended to reuse existing connections whenever possible.

Connection reuse, whether through HTTP keep-alive or a connection pool, allows multiple requests to be sent over the same TCP connection, reducing the need to establish a new connection for each request.
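To see connection reuse in action, here is a small self-contained demo using only the standard library. It starts a throwaway HTTP/1.1 server on localhost and sends several requests through one `http.client.HTTPConnection`. The names `Handler`, `run_demo`, and `seen_client_ports` are made up for this sketch.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

seen_client_ports = []  # one entry per request: the client's TCP source port


class Handler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # lets the server keep connections open

    def do_GET(self):
        seen_client_ports.append(self.client_address[1])
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))  # required for keep-alive
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def run_demo(num_requests=3):
    """Send num_requests GETs over one connection; return the ports the server saw."""
    seen_client_ports.clear()
    server = ThreadingHTTPServer(("127.0.0.1", 0), Handler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    conn = http.client.HTTPConnection(host, port)
    try:
        for _ in range(num_requests):
            conn.request("GET", "/")
            conn.getresponse().read()  # drain the body before reusing the connection
    finally:
        conn.close()
        server.shutdown()
        server.server_close()
    return list(seen_client_ports)


ports = run_demo(3)
print("distinct client ports:", len(set(ports)))
```

Every request arrives from the same client port, which means only one TCP handshake took place. Higher-level HTTP clients usually offer the same behavior through a session or pool object, so it is worth checking that your code creates it once and reuses it rather than building a new client per request.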

👉 So - which other low-latency techniques have you used?

That’s it for today! ☀️

See you later with another edition — Saurabh
